Microsoft Copilot is now available for enterprise customers, transforming productivity in the Microsoft 365 ecosystem.

However, security and privacy concerns have companies hesitant to deploy Copilot. There are operational, regulatory, and reputational risks that every organization needs to overcome before they can leverage Copilot.

In this guide, we’ll share steps your security teams can take to ensure your organization is ready for Microsoft Copilot before and after deployment, including how Varonis can help.

Before we do, it’s important to understand how Microsoft Copilot works.

How Microsoft Copilot Works

In Microsoft's words, "Copilot combines the power of large language models (LLMs) with your organization’s data — all in the flow of work — to turn your words into one of the most powerful productivity tools on the planet. It works alongside popular Microsoft 365 (M365) Apps such as Word, Excel, PowerPoint, Outlook, Teams, and more. Copilot provides real-time intelligent assistance, enabling users to enhance their creativity, productivity, and skills."

That all sounds great, right? Use the power of LLMs and generative AI to index and learn from your enterprise data to make your employees more productive and get even more value from all that data you've been creating for years, if not decades.

You probably have M365 deployed already, and your users are already creating and collaborating on relevant data across the Microsoft ecosystem. Turning on Copilot will make them all the more productive. What could possibly go wrong?

Well, two things.

First, Copilot uses existing user permissions to determine what data a person can access.

If a user prompts Copilot for information about a project or a company, Copilot will analyze all the content that person has access to (files, emails, chats, notes, and so on) in order to produce results. If that user technically has access to a file that contains employee data, Copilot doesn’t know that they shouldn’t be able to see it.
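To make that concrete, here is a minimal Python sketch of permission-based filtering. It is an illustration only, not how Copilot is implemented; the `Document` model, the `visible_to` helper, the `Everyone` group, and the file names are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Document:
    name: str
    allowed: set[str]        # users or groups with read access (hypothetical model)
    sensitive: bool = False


def visible_to(user: str, groups: set[str], docs: list[Document]) -> list[Document]:
    """Return every document the user can read, directly or through a group."""
    principals = {user} | groups
    return [d for d in docs if d.allowed & principals]


docs = [
    Document("project-mango-plan.docx", {"alice", "bob"}),
    Document("salaries-2023.xlsx", {"Everyone"}, sensitive=True),  # overshared
    Document("board-minutes.docx", {"ceo"}, sensitive=True),       # properly restricted
]

# Anything readable by the user is fair game for an assistant acting on their behalf.
reachable = visible_to("alice", {"Everyone"}, docs)
exposed = [d.name for d in reachable if d.sensitive]
print(exposed)  # ['salaries-2023.xlsx']
```

The filter does its job correctly. The problem is the overshared permission on the salary file, which the assistant simply inherits.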

Secondly, Copilot makes it super easy to create new content with sensitive information.

I can ask Copilot to summarize everything related to Project Mango, for example, and it will do exactly that by suggesting documents and other content I have access to that’s relevant. Suddenly, I’ve created a brand-new document with more sensitive information that needs to be secured.

Microsoft has some guidelines on getting ready for Copilot that you should be aware of, especially when it comes to security.

According to Microsoft, "Copilot uses your existing permissions and policies to deliver the most relevant information, building on top of our existing commitments to data security and data privacy in the enterprise."

If you're going to give people new tools to access and leverage data, you need to make sure that data is secure. The challenge, of course, is that with collaborative, unstructured data platforms like M365, managing permissions is a nightmare that everyone struggles with. Here's an example of what we usually see when we do a Varonis Data Risk Assessment on an M365 environment:

An example of typical M365 exposure levels in an environment that doesn't use Varonis alongside Microsoft. If these exposure levels aren't addressed before implementing generative AI tools, your risk of data exposure increases.

What do you see? Sensitive data in places it probably shouldn’t be, like in personal OneDrive shares. Critical information is available to everyone in the organization, or guests and external users.

These exposures were very common with on-premises file systems, but the magnitude of the problem explodes with M365. Frictionless collaboration means users can share with anyone they want, making data available to people who shouldn't have access.

So, how can you deploy Copilot safely and minimize the risks involved? The good news is there are specific steps you can take before and after deploying Copilot that will help.

Let’s look at what to do before you roll out Copilot at your organization.

Phase one: Before Copilot

Before you enable Copilot, your data needs to be properly secured. Copilot uses your existing user permissions to determine what it will analyze and surface. The outcomes you need to achieve are visibility, least privilege, ongoing monitoring, and safely using downstream data loss prevention (DLP) like Purview, and Varonis can help.

Step one: Improve visibility.

You can’t secure data without knowing what data you have, where it is stored, what information is important, where the controls aren’t properly applied, and how your data is being used and by whom.

Which third-party apps are connected and what do they have access to? Where is sensitive data being shared organization-wide or with external third parties? What data isn’t even used anymore? Is our data accurately labeled or labeled at all?

Scanning all identities, accounts, entitlements, files, folders, site permissions, shared links, data sensitivity, and existing (or not) labels for all of your data can answer these questions.
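As a rough illustration of the kind of summary such a scan can produce, the Python sketch below counts sensitive files by how broadly they are shared and how many of them have no label. The inventory rows, scope names, and `summarize` helper are hypothetical; a real scan would pull this data from your tenant rather than a hard-coded list.

```python
from collections import Counter

# Hypothetical inventory rows: (path, is_sensitive, label, sharing_scope)
inventory = [
    ("hr/payroll.xlsx", True, None, "organization"),
    ("sales/leads.csv", True, "Confidential", "external"),
    ("eng/readme.md", False, None, "organization"),
    ("finance/q3.xlsx", True, "Confidential", "specific_people"),
    ("legal/contract.pdf", True, None, "anyone"),
]

# Scopes we treat as broad exposure in this sketch.
BROAD_SCOPES = {"organization", "external", "anyone"}


def summarize(rows):
    """Count sensitive files per broad sharing scope, plus unlabeled sensitive files."""
    exposed = Counter()
    unlabeled = 0
    for _path, sensitive, label, scope in rows:
        if not sensitive:
            continue
        if scope in BROAD_SCOPES:
            exposed[scope] += 1
        if label is None:
            unlabeled += 1
    return dict(exposed), unlabeled


exposure, unlabeled = summarize(inventory)
print(exposure)   # {'organization': 1, 'external': 1, 'anyone': 1}
print(unlabeled)  # 2
```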

Varonis will show you your overall data security posture, what sensitive data is exposed, open misconfigurations, and more.

Varonis deploys quickly and allows you to easily see not just where sensitive and regulated data is but exactly who has access to it, where it’s exposed, whether there is a label on it (or if that label is correct), and how it’s being used.

Based on our experience, you’ll likely find data in places you don’t expect, like customer data in someone’s OneDrive or digital secrets like private keys in Teams. You’ll also find data that’s accessible to the entire company, and links that can be used by external users or even worse, anyone on the internet.

Without visibility, you can’t measure risk, and without measuring risk, you can’t safely reduce it. It’s an important first step in securing your data before rolling out Copilot.

Step two: Add and fix labels.

Microsoft provides functionality through Purview for data protection, but the controls require that the files themselves have accurate sensitivity labels. The trick is, how do you apply these?

If you use basic or inaccurate classification and apply labels automatically, data can be “over-labeled,” meaning too much data gets marked as sensitive and the controls become too restrictive. Collaboration will be impacted, business processes may fail or be impaired, and users will be incentivized to downgrade labels in order to avoid controls.

The outcome is often disabling downstream DLP controls to avoid business impact. If you rely on your users, most of your data will never get looked at and if files don’t have labels, then the controls won’t apply at all.

With accurate, automatic scanning and integration with Microsoft Purview, you can solve the majority of these problems.

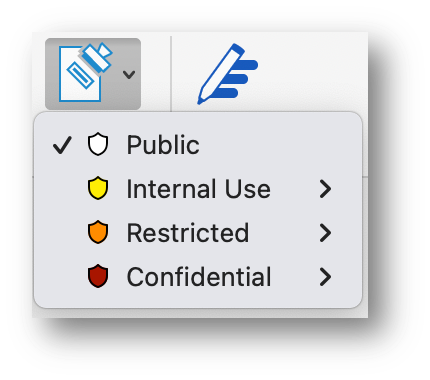

Labels used for information available in Purview.

Lots of us have struggled with this dilemma. Do you force users to label everything? How much of your data will actually get labeled accurately that way? What about all the data that’s already been created? No one is going to go back and look at everything. What about users who make mistakes or intentionally mislabel files to get around controls?

Automation is key here.

If you can scan every file accurately to identify sensitive content like PII, payment card information, digital secrets like passwords, keys, and tokens, and intellectual property like code, you can then automatically apply a label or fix a misapplied one.

The key here is that you need to scan every file, including when files are changed, and you need to use an automatic classification engine with accurate rules.
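To show the idea at its simplest, here is a Python sketch of rule-based classification that maps detected content to a suggested label. It is a toy under stated assumptions: the three patterns, the label names, and the `suggest_label` helper are hypothetical, and a production engine needs far more rules and validation than this. The Luhn checksum is included to show one way a rule can cut false positives on card-like numbers.

```python
import re

# Illustrative patterns only; real classifiers use many more rules and context checks.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")
PRIVATE_KEY_RE = re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def luhn_ok(number: str) -> bool:
    """Validate a candidate card number with the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def suggest_label(text: str) -> str:
    """Return a suggested sensitivity label (hypothetical label names)."""
    if PRIVATE_KEY_RE.search(text):
        return "Highly Confidential"
    if any(luhn_ok(m.group()) for m in CARD_RE.finditer(text)):
        return "Highly Confidential"
    if SSN_RE.search(text):
        return "Confidential"
    return "General"


print(suggest_label("Card on file: 4111 1111 1111 1111"))  # Highly Confidential
print(suggest_label("Order id 1234 5678 9012 3456"))       # General
```

The second example looks like a card number but fails the checksum, so it is not labeled as sensitive. That kind of validation is what keeps automatic labeling from over-labeling.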

This isn’t necessarily easy to do at scale. False positives make labels less useful: if files are “over-labeled” and marked as sensitive or internal when they’re not, users may run into situations where they can’t get their work done. This prevents a lot of enterprises from turning on DLP since they don’t want to break anything.

With correct labels, you’re in a much better position to leverage the downstream DLP controls that you get with Purview.

Step three: Remediate high-risk exposure.

Varonis identifies not only what data is sensitive but also scans every file for a label. Varonis integrates with Purview so you can apply labels to files that don’t have them, as well as fix labels that were either misapplied or downgraded by a user.

According to Microsoft, half of the permissions in the cloud are high-risk, and 99% of permissions granted aren’t used.

Many organizations are moving toward a Zero-Trust model, making data available only to those who need it — not the entire company or everyone on the internet.

This graph highlights the risks involved when a majority of files are shared with org-wide or external permissions. With Varonis enabled, the risk is reduced dramatically.

It’s crucial that you remove high-risk permissions like "org-wide" and "anyone on the internet" links to keep your information secure, especially for sensitive data. Varonis makes this easy and automatic: just enable the relevant policy, choose the scope, and let the robot handle fixing the problem.

An example of adding a policy to remove collaboration link types.
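In code terms, a policy like this boils down to a filter over sharing links. The Python sketch below is a hypothetical model, not the Varonis policy engine: the `SharingLink` record, the label names, and the `links_to_remove` helper are assumptions, and the scope values are loosely modeled on the way sharing links are commonly described (`anonymous`, `organization`, `users`).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SharingLink:
    item: str
    scope: str              # "anonymous", "organization", or "users" (assumed values)
    label: Optional[str]    # sensitivity label on the item, if any


HIGH_RISK_SCOPES = {"anonymous", "organization"}
SENSITIVE_LABELS = {"Confidential", "Highly Confidential"}  # hypothetical label names


def links_to_remove(links, scopes=HIGH_RISK_SCOPES, only_sensitive=True):
    """Select links whose scope is high-risk, optionally limited to sensitive items."""
    selected = []
    for link in links:
        if link.scope not in scopes:
            continue
        if only_sensitive and link.label not in SENSITIVE_LABELS:
            continue
        selected.append(link)
    return selected


links = [
    SharingLink("hr/payroll.xlsx", "organization", "Highly Confidential"),
    SharingLink("marketing/brochure.pdf", "anonymous", None),
    SharingLink("finance/q3.xlsx", "users", "Confidential"),
]

print([l.item for l in links_to_remove(links)])
# ['hr/payroll.xlsx']
print([l.item for l in links_to_remove(links, only_sensitive=False)])
# ['hr/payroll.xlsx', 'marketing/brochure.pdf']
```

Choosing the scope (`only_sensitive` here) is the design decision that matters: starting with sensitive data lets you remove the riskiest links first without disrupting harmless public sharing.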

Step four: Review access to critical data.

Many organizations hesitate to automate remediation for their data because they’re unsure what might break if they do. Additionally, not all access is as broad as anyone on the internet, but that doesn’t mean that information is secure.

For your most important data, like top-secret intellectual property or highly-restricted personal information about customers or patients, it’s critical to take a look at what you have and determine whether the amount of access is appropriate. You don’t want your users on the trading floor to be able to see the contents of employee 401(k)s, for instance.

Varonis can easily find where your most critical data is, see who (and what) has access to it, who’s using it and owns it, and ensure it’s not open to people who might use Copilot to expose it or create new files with it.

Varonis' Athena AI lets you use natural language search to easily identify issues in minutes and ensure your data is protected.

This process is now even easier with Athena AI, Varonis’ new generative AI layer built into our Data Security Platform. You can use Athena AI to easily identify the resources and behaviors you need by simply asking. Find your most critical data and ensure it’s not widely exposed or in the wrong place, all within minutes.

Step five: Remediate additional risky access.

Once you’ve taken care of the first four steps, use automation to ensure risky or stale access is taken care of without any effort.

It’s one thing to decide what permissions policies should be, but without the power of automation, you risk no one actually adhering to them.

An example of how Varonis can automatically eliminate stale or risky links and permissions based on the policies your organization puts in place.

Our out-of-the-box robots in Varonis eliminate stale or risky links and permissions that aren’t needed anymore and remove access that exposes sensitive data widely.

These automations can be easily configured to apply to certain data in specific places, allowing you to fix things continuously, on a schedule, or require your approval beforehand. Easily set it and forget it, while ensuring your policies apply without needing an army to implement them.
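The sketch below shows, in Python, the basic shape of a stale-access policy with an optional approval step. It is a simplified illustration, not how the Varonis robots work; the `plan_stale_cleanup` helper, the 90-day threshold, and the grant tuples are hypothetical.

```python
from datetime import date, timedelta


def plan_stale_cleanup(grants, today, max_idle_days=90, require_approval=True):
    """Build a list of actions for access that has not been used recently.

    grants: iterable of (user, resource, last_used) where last_used is a date,
    or None if the access has never been used.
    """
    cutoff = today - timedelta(days=max_idle_days)
    action = "queue_for_approval" if require_approval else "revoke"
    plan = []
    for user, resource, last_used in grants:
        if last_used is None or last_used < cutoff:
            plan.append((action, user, resource))
    return plan


grants = [
    ("alice", "/hr", date(2024, 5, 20)),   # used recently, keep
    ("bob", "/hr", date(2023, 11, 2)),     # idle for months
    ("carol", "/finance", None),           # never used
]

print(plan_stale_cleanup(grants, today=date(2024, 6, 1)))
# [('queue_for_approval', 'bob', '/hr'), ('queue_for_approval', 'carol', '/finance')]
```

Running the same function on a schedule with `require_approval=False` corresponds to the fully automatic mode. Keeping the approval step is the more cautious starting point.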

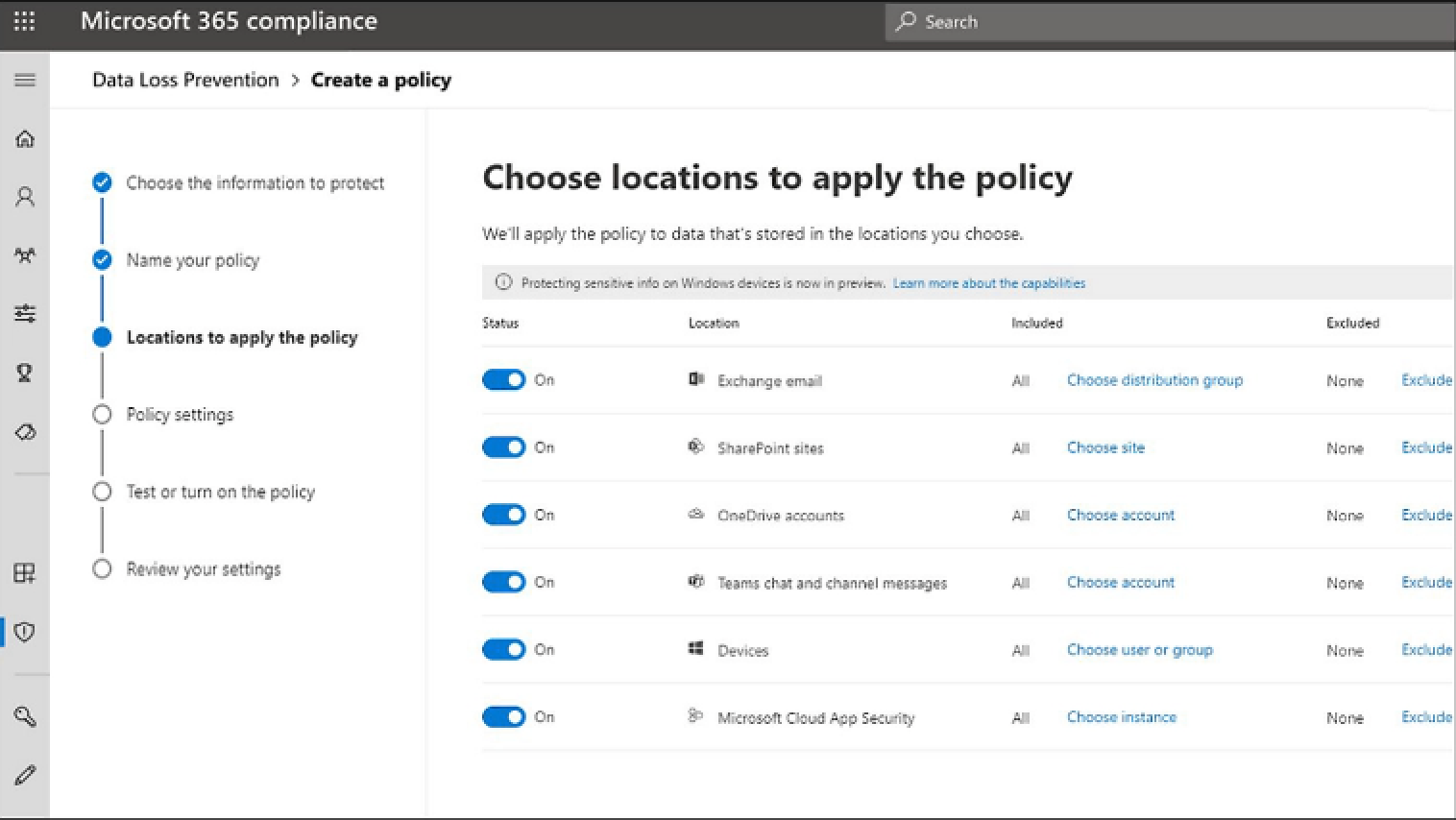

Step six: Enable downstream DLP with Purview.

Enable preventative controls provided by Microsoft in Purview.

Now that your files are accurately labeled and your biggest risks are taken care of, you can safely enable the preventive controls that Microsoft provides through Purview.

Purview protects data by applying flexible protection actions such as encryption, access restrictions, and visual markings to labeled data, giving you additional controls to ensure your data isn’t exposed.

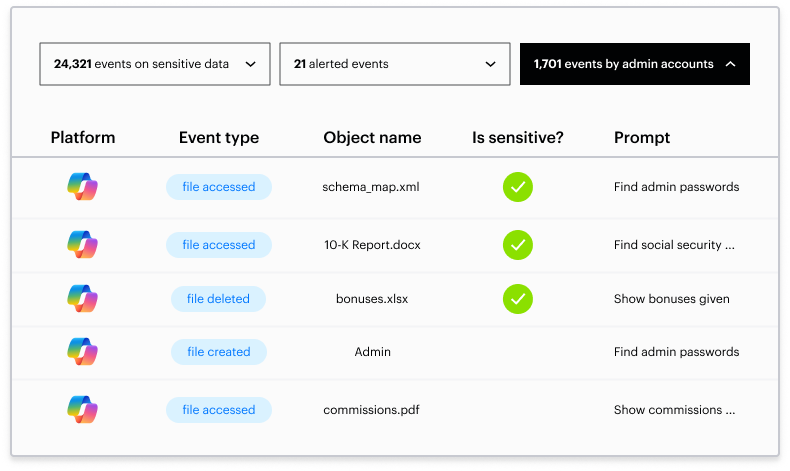

Varonis for Microsoft 365 Copilot is an essential add-on that enhances your Copilot deployment. It builds on our existing Microsoft 365 security suite, providing real-time monitoring of Copilot prompts, responses, and data access.

With Varonis, you can detect abnormal Copilot interactions, automatically limit access to sensitive data by both humans and AI agents, and ensure a secure and compliant rollout of Copilot within your organization.

To explore these capabilities further, consider joining our live lab sessions or schedule a free Copilot Security Scan.

Deploy Copilot

Now that you’ve locked things down, tagged your files, eliminated high-risk links, and have automation in place, it’s time to turn on Copilot! You can now safely get the productivity gains of gen AI and get more value out of your data.

So, is that it? Not quite.

Phase two: After Copilot

Once you’ve deployed Copilot, you need to make sure that your blast radius doesn’t grow and that data is still being used safely.

There are specific steps you can take to ensure any malicious or risky behavior is quickly identified and remediated and that your blast radius doesn’t spiral out of control again. Let’s go over what those steps entail.

Step seven: Ongoing monitoring and alerting.

Because Copilot can make it so easy to find and use sensitive information, watching how all your data is being used is critical so that you can lower the time to detection (TTD) and time to response (TTR) for any threat to that data.

Varonis monitors all the data touches, authentication events, posture changes, link creation and usage, and object changes with the context of what the data is and how it’s normally used.

When a user suddenly accesses an abnormal amount of sensitive information they’ve never looked at, or when a device or account gets used by a malicious third party, you’ll know about it quickly and can respond to it easily.
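As a deliberately simple illustration of baseline-driven alerting, the Python sketch below flags a user whose sensitive-file access count for the day is far above their own history. This is not the Varonis detection model; the `is_abnormal` helper, the three-standard-deviation threshold, and the sample counts are all assumptions, and real behavioral analytics weigh many more signals (device, location, time, data type).

```python
from statistics import mean, pstdev


def is_abnormal(history, today_count, threshold=3.0, min_days=7):
    """Flag today's count if it sits far above the user's own baseline.

    history: daily counts of sensitive files the user accessed in the past.
    """
    if len(history) < min_days:
        return False  # not enough data to form a baseline
    mu = mean(history)
    # Floor the spread so a very steady user does not trigger on tiny changes.
    sigma = max(pstdev(history), 1.0)
    return (today_count - mu) / sigma > threshold


baseline = [2, 3, 1, 4, 2, 3, 2, 3, 2, 4]

print(is_abnormal(baseline, 4))    # False
print(is_abnormal(baseline, 120))  # True
```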

Amy Johnson's behavior triggered an alert based on the devices used, data accessed and when, and abnormal user behavior.

Our Managed Data Detection and Response team will keep an eye on your alerts for you, and when we see something suspicious, like an account sharing large amounts of sensitive data or a user behaving like an attacker, we’ll call you and let you know.

Your TTD and TTR go way down, with less effort on your part.

Step eight: Automate policies for access control.

Automatically locking down access is kind of like mowing your lawn — just because you do it once doesn’t mean you never need to do it again.

Your users are continuously creating more data and collaborating with others. Your blast radius of what gen AI tools could expose will always grow unless you have automation in place to correct it.

The Varonis robots keep watch on your data, who is using their access, where critical data is exposed, and how it’s being used to safely and intelligently keep information locked down.

This means that all the preparation you put in place before deploying Copilot stays intact, and you can continue to use Copilot safely and efficiently.

Varonis continuously keeps your data protected using the power of automation.

Don’t wait for a breach to occur.

Built on the power of LLMs and generative AI, Microsoft Copilot can make your employees more productive and help you get more value from the mountains of content your team has been generating for years.

But with great power comes great risk. If your organization neglects to follow the steps outlined in this guide, gen AI tools can expose your sensitive data and easily create more, putting you at risk for data loss, theft, and misuse.

Through visibility and automation, Varonis can give your organization the ability to secure your data automatically in M365 and safely deploy Copilot without causing disruption.

See Varonis in action and schedule a quick 30-minute demo today.

What should I do now?

Below are three ways you can continue your journey to reduce data risk at your company:

Schedule a demo with us to see Varonis in action. We'll personalize the session to your org's data security needs and answer any questions.

See a sample of our Data Risk Assessment and learn the risks that could be lingering in your environment. Varonis' DRA is completely free and offers a clear path to automated remediation.

Follow us on LinkedIn, YouTube, and X (Twitter) for bite-sized insights on all things data security, including DSPM, threat detection, AI security, and more.
