The rise of deepfake technology, from AI-generated voices to synthetic videos, is turning once-theoretical attacks into real threats. Cybercriminals are weaponizing these tools to impersonate trusted voices and faces, aiming directly at the weakest link: human trust. Security teams now face vishing calls where an executive's voice sounds genuine, or scam videos where a familiar face delivers orders.

In this blog, we’ll explore four evolving attack types enabled by deepfakes and voice clones, and how focusing on identity security can blunt their impact. We’ll examine how each threat works and show a real-world example of each. Finally, we’ll discuss how an identity-focused approach can help detect and mitigate these novel attacks before they cause damage.

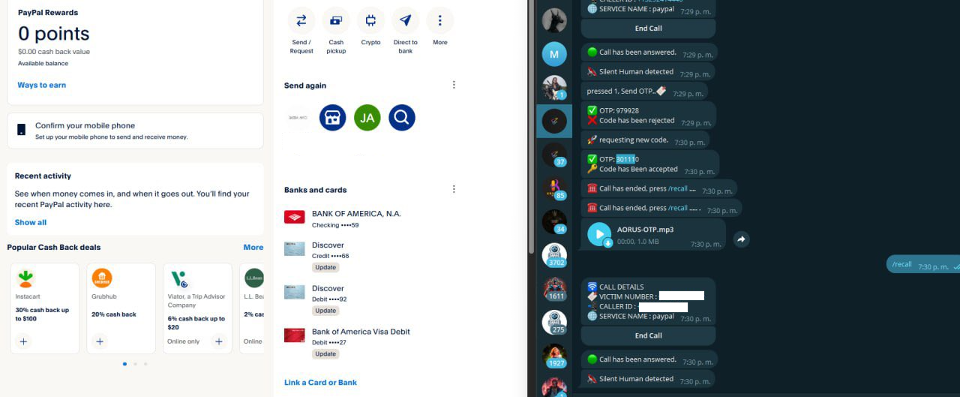

One-time password bots: cracking MFA with AI voices

One-time password (OTP) bots automate the theft of two-factor authentication (2FA) codes by combining technical tricks with social engineering.

Here’s how a typical OTP bot attack plays out:

After stealing a victim’s login credentials, the attacker tries to log in and triggers an OTP or push notification. The OTP bot then contacts the victim, often with an AI-powered voice call or spoofed text posing as the bank or service that urgently requests the code, claiming it’s needed for verification. The unsuspecting end-user, convinced by the familiar-sounding voice and timing, divulges the code, which the bot instantly uses to complete the fraudulent login.

An OTP bot attack flow using AI voice to steal 2FA codes


The scenario above effectively punches through the 2FA barrier. OTP bots are now widely used in the cybercrime ecosystem: they’re cheap, readily available on illicit forums and Telegram channels, and require little skill to use. Attackers have used them to bypass MFA at scale, targeting thousands of bank accounts, crypto wallets, and corporate email accounts in automated sweeps. With AI voice clones, these bot calls sound more convincing than ever. What used to be a robotic fraud-alert call is now a smooth human voice in the right regional accent.

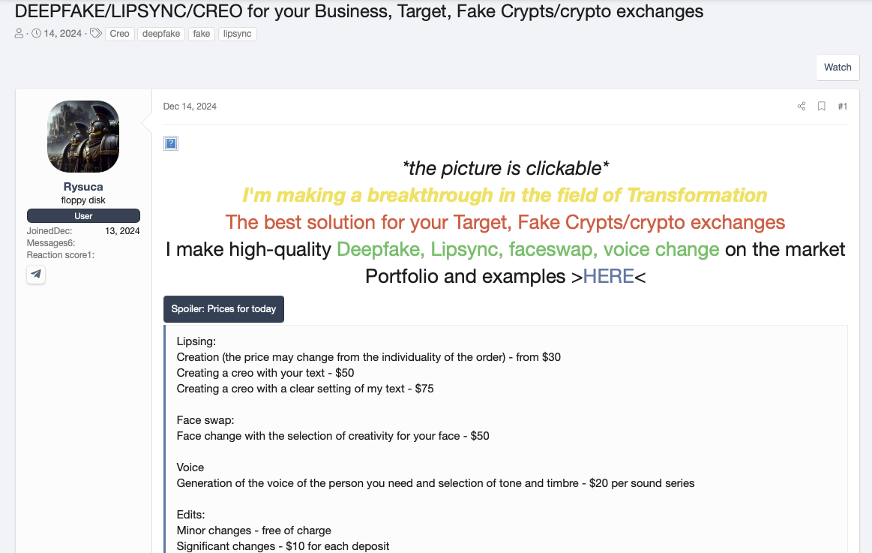

KYC bypass for banks: faking identities with deepfakes

Financial institutions rely on know your customer (KYC) checks, like photo identification and selfie videos, to verify new accounts. Deepfakes are now subverting these protections. Cybercriminals can create lifelike digital avatars of people to trick online onboarding systems that use facial recognition and liveness tests. AI can generate a video of a person turning their head and reading a prompt, matching whatever stolen ID data the attacker has. Banks or crypto exchanges, seeing a face that looks legitimate on camera, unwittingly approve the fake account.

A dark market listing for deepfake KYC bypass kits


These ready-made deepfake identity kits let scammers instantly spawn a believable persona to open and recover fraudulent bank and crypto accounts. In 2024, a large Asian financial institution discovered over 1,100 deepfake attempts to defeat its biometric KYC process for loan applications. Attackers had manipulated stolen ID photos, altering faces and lip-syncing video, to trick the bank’s facial recognition checks.

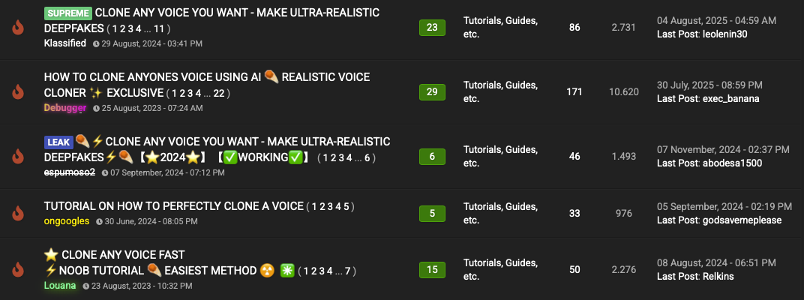

CEO fraud: impersonating executives with deepfakes

CEO impersonation fraud has been around for years via email, but AI has supercharged its effectiveness. Attackers now use voice cloning and even full video deepfakes to pose as executives or business partners, duping employees into initiating unauthorized transactions. Imagine getting a call from your CFO (you recognize their voice), urgently instructing you to wire money to a vendor. Or joining a video meeting where your CEO's face appears, discussing a confidential deal that requires immediate fund transfers. With deepfakes, these scenarios can be fabricated with alarming realism.

Forum posts advertising voice cloning for fraud


A string of high-profile cases confirms the danger. Back in 2019, cybercriminals made headlines by using AI to mimic a UK company executive’s voice, tricking an employee into transferring €220,000 (US$243K) to the attackers’ account. The staff member was convinced they were speaking with their boss; the deepfake even replicated the CEO’s slight accent and tone. Fast forward to 2024, and the stakes have grown: scammers deepfaked a group video call with a multinational’s Chief Financial Officer, fooling colleagues into transferring HK$200 million (US$25.6M).

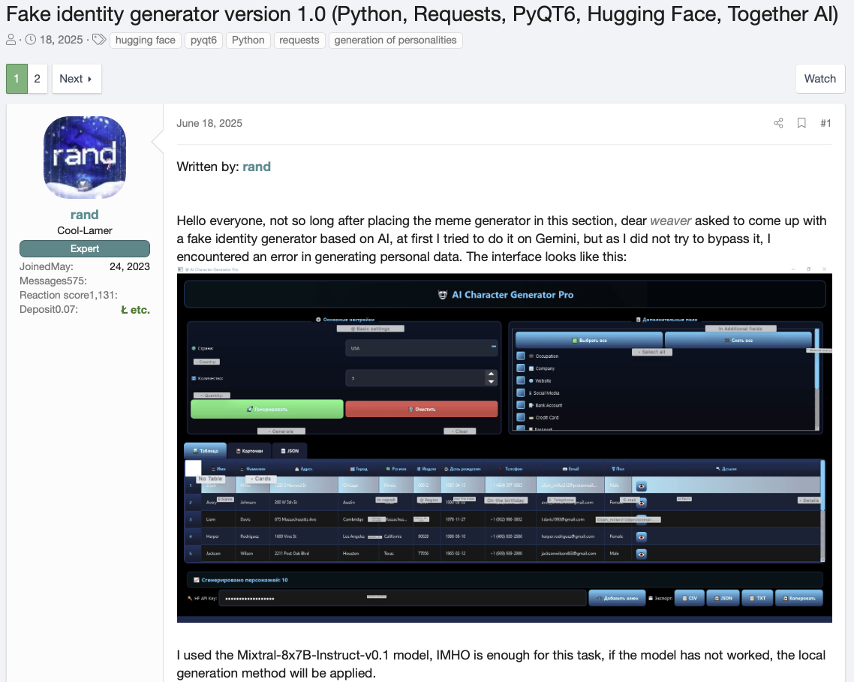

Fake hiring for insider threats: impostors as employees

Deepfakes are not only being used against employees; sometimes, they are the employees. In a troubling trend, attackers have started using AI-generated faces and voices to infiltrate companies by applying as fake job candidates. With many organizations conducting remote hiring and virtual interviews, a skilled cybercriminal can present a completely false identity over a webcam.

For example, an impostor can submit a stolen résumé under an assumed name, then use a deepfake video feed in the interview, perhaps an AI-generated face that matches the stolen ID, combined with voice-changing software. To the hiring manager, it looks and sounds like a real person. In reality, the company might be onboarding an attacker (or even a nation-state agent) directly into its systems.

A fake identity generator that can be used to pass remote hiring


In June 2022, the FBI issued a public alert about an increase in complaints of deepfake job applicants clearing the interview process. These fake candidates often target IT and developer roles that would give them access to sensitive customer data, databases, or critical infrastructure once hired.

During some interviews, observant staff noticed odd discrepancies: the applicant’s lip movements and facial expressions didn’t perfectly sync with their speech, suggesting the video was manipulated in real time. In one report, the impostor even coughed on the audio without any visible motion on camera. These clues have tipped off companies before a hire was made, but not everyone catches them in time.

Strengthening identity security

As these examples show, deepfake and voice clone attacks ultimately target identity. They succeed by impersonating or undermining the identities of people in our systems. Whether it’s a fake CFO tricking finance or a fake new hire infiltrating IT, the common weakness is the assumption that identity equals trust.

To defend against this type of threat, organizations should think about verifying and monitoring identities in more dimensions than ever before. That means looking at context and behavior: Is this really the person they claim to be, and are they doing something unusual?

One effective strategy is to correlate disparate signals about overall user activity. Useful signals include:

- Impossible travel: the same user credential logs in from New York and, 10 minutes later, from London (a physically impossible trip).

- Off-hours or odd timestamp access: a user who never logs in on weekends suddenly accesses a system late Sunday night.

- Geographic anomalies: logins originating from countries or locations that your employee base never uses.

- Device or network mismatches: an employee’s account seen using an unfamiliar MAC address or ISP, inconsistent with their normal devices.

By mapping out a normal identity profile, you can spot when something deviates in a way that suggests an impersonation or account takeover.
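The impossible-travel signal from the list above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: the `Login` record, the 1,000 km/h speed ceiling, and the 100 km noise floor are all assumptions chosen for the example, and a real deployment would also have to account for VPNs and imprecise IP geolocation.

```python
from dataclasses import dataclass
from datetime import datetime
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 1000.0  # assumed ceiling, roughly airliner speed
MIN_DISTANCE_KM = 100.0           # assumed noise floor for IP geolocation error


@dataclass(frozen=True)
class Login:
    """Hypothetical login record: who, when, and an estimated location."""
    user: str
    time: datetime
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometers."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi = phi2 - phi1
    dlam = radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def is_impossible_travel(prev: Login, curr: Login) -> bool:
    """True if moving between the two logins would exceed the speed ceiling."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    if distance < MIN_DISTANCE_KM:
        return False  # too close to distinguish from geolocation noise
    hours = abs((curr.time - prev.time).total_seconds()) / 3600
    if hours == 0:
        return True  # simultaneous logins from distant locations
    return distance / hours > MAX_PLAUSIBLE_SPEED_KMH


# The New York / London example from the list above.
new_york = Login("alice", datetime(2024, 6, 3, 9, 0), 40.7128, -74.0060)
london = Login("alice", datetime(2024, 6, 3, 9, 10), 51.5074, -0.1278)
print(is_impossible_travel(new_york, london))
```

A hit like this is best treated as one signal to correlate with the others in the list, since a legitimate user switching VPN exit nodes can produce the same pattern.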

This is precisely where Varonis comes into play.

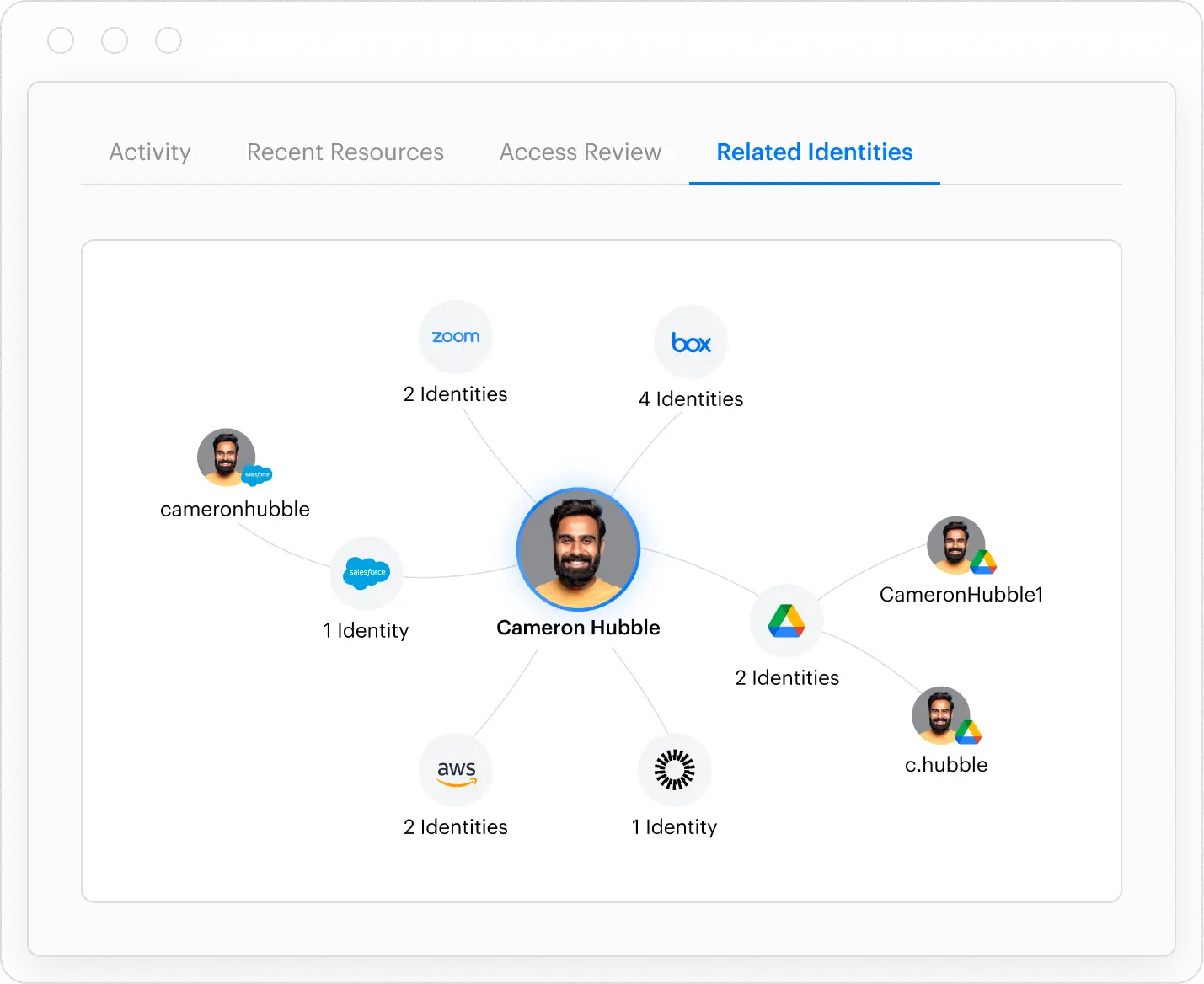

Varonis’ platform is built to map accounts across an organization’s entire ecosystem back to a single human identity, even if a user has dozens of accounts across SaaS apps, AD domains, and cloud services. It then correlates each user’s roles, group memberships, and entitlements to understand their typical access patterns and privileges. Using machine learning, Varonis classifies which accounts or access rights are high risk compared to normal user access.

Varonis’ identity threat detection capability


Armed with this context, Varonis can flag suspicious activity that might indicate a deepfake or credential compromise. For example, it can catch impossible travel logins, an employee account trying to access data it has never accessed before, or a flurry of file access outside of working hours. These subtle signs often accompany an attack where the adversary, despite using a stolen or faked identity, fails to mimic the victim’s usage patterns.
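To make the idea of a per-user baseline concrete, here is a toy Python sketch that flags off-hours activity and first-time access to a resource. It is not how Varonis implements detection; the `Baseline` structure, the `(weekday, hour)` bucketing, and the resource names are hypothetical and deliberately crude.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Baseline:
    """Hypothetical per-user profile built from past activity."""
    active_hours: set = field(default_factory=set)  # (weekday, hour) pairs seen
    resources: set = field(default_factory=set)     # resources touched before


def build_baselines(history):
    """history: iterable of (user, datetime, resource) tuples."""
    baselines = defaultdict(Baseline)
    for user, when, resource in history:
        profile = baselines[user]
        profile.active_hours.add((when.weekday(), when.hour))
        profile.resources.add(resource)
    return baselines


def find_anomalies(baselines, user, when, resource):
    """Return a list of ways this event deviates from the user's baseline."""
    profile = baselines.get(user)
    if profile is None:
        return ["unknown user"]
    findings = []
    if (when.weekday(), when.hour) not in profile.active_hours:
        findings.append("off-hours access")
    if resource not in profile.resources:
        findings.append("first-time resource access")
    return findings


# Assumed sample history: alice works weekday mornings on two systems.
history = [
    ("alice", datetime(2024, 6, 3, 10, 0), "crm"),   # Monday
    ("alice", datetime(2024, 6, 4, 10, 0), "wiki"),  # Tuesday
]
baselines = build_baselines(history)

# A late Sunday-night touch on a system she has never used.
print(find_anomalies(baselines, "alice", datetime(2024, 6, 9, 23, 0), "payroll-db"))
```

Exact-match bucketing like this would be far too noisy in production; the point is only that an impostor holding valid credentials still has to reproduce the victim's habits to stay unnoticed.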

When such an alert is triggered, security teams can drill down and see that User X’s identity might be compromised, and, importantly, they can respond in a coordinated way. Varonis makes it easy to immediately disable or lock down the affected user’s accounts across dozens of systems at once. This matters if, say, an attacker deepfakes their way into obtaining someone’s Okta credentials: you want to quickly revoke that identity’s access everywhere before the damage spreads.

Get started with a free Data Risk Assessment from Varonis.

