Data classification is a foundational step in protecting your organization’s information. But today’s data landscape — shaped by cloud data stores, collaboration apps, hybrid environments, and AI — requires more than pattern matching or purely AI-driven approaches. What works is combining the right classification techniques for the classification task to precisely identify sensitive data at scale.

Why data classification matters

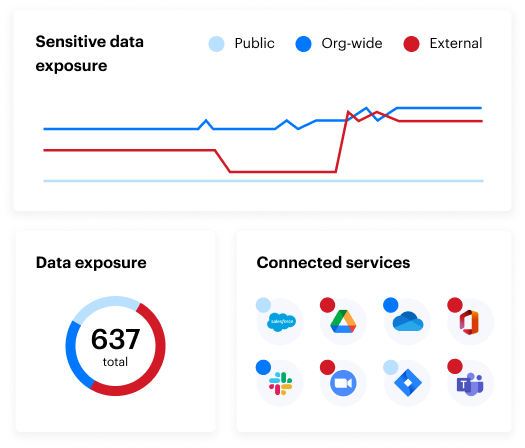

Imagine leading security for a 10,000-person organization where users create millions of resources and messages each day. Some contain critical intellectual property or personal data, and others are mundane or even intended for public consumption.

Without knowing which is which, it can be virtually impossible to prioritize risk, enforce least privilege, and comply with privacy laws. Effective data classification gives you clarity into where your sensitive data lives, who has access to it, and how it’s being used.

What is data classification?

Data classification is the process of analyzing structured, semi-structured, and unstructured data and categorizing it based on resource type, contents, and metadata. Data classification helps organizations answer important questions about their data that inform how they mitigate risk and manage data governance policies. A modern classification strategy doesn’t rely on a single technique. Instead, it blends:

- Deterministic pattern matching for known, predictable data types

- AI and machine learning for ambiguous, context‑specific, or proprietary content

- Metadata analysis (file type, owner, source system)

- Permissions and activity context to determine actual exposure

This right-tool-for-the-job approach dramatically improves accuracy, reduces false positives, and allows classification to scale across massive, constantly changing environments.

The purpose of data classification

Gartner identifies four core use cases for classification. A modern strategy strengthens each by applying the right technique for the right outcome.

Risk mitigation

- Reduce exposure of identifiable information (PII) and intellectual property (IP)

- Reduce blast radius of potential breaches

- Integrate classification into DLP and other policy-enforcing applications

Governance/Compliance

- Identify regulated data (GDPR, HIPAA, CCPA, PCI, SOX)

- Apply correct metadata tags and enable additional tracking and controls

- Enable quarantining, legal hold, archiving and other regulation-required actions

- Facilitate “Right to be Forgotten” and Data Subject Access Requests (DSARs)

Efficiency and optimization

- Identify redundant, obsolete, and trivial (ROT) data

- Enable efficient access to content based on type, usage, etc.

- Move heavily utilized data to faster devices or cloud-based infrastructure

Analytics

- Enable metadata tagging to optimize business activities

- Inform the organization on location and usage of data

Data sensitivity levels

Most organizations benefit from three levels of classification: simple enough to manage, comprehensive enough to protect.

-

High sensitivity data: Protected by law (GDPR, CCPA, HIPAA) or likely to cause severe harm if exposed. Examples: PHI, financial records, source code.

-

Medium sensitivity data: Internal‑only data where a breach would be inconvenient but not catastrophic. Examples: non‑identifiable employee data, internal drafts.

-

Low sensitivity data: Public information requiring no access restrictions. Examples: blog posts, job listings, marketing materials. Overly complex schemes create confusion and dilute adoption — keep it simple.

Organizations may use different nomenclature and may have more than three categories, depending on their use cases.

Choosing the right classification approach

Modern classification relies on a flexible, multi‑method framework that recognizes the strengths and limitations of different approaches. Instead of forcing all data into a single method, effective programs apply the right technique based on the type of data, its structure, and the desired outcome.

Pattern-based classification

Pattern-based classification is king for structured data types like credit card numbers or healthcare identifiers. Techniques like proximity matching, negative keywords, and algorithmic verification (e.g., Luhn for credit cards) deliver high precision at low compute cost.

Exact Data Match (EDM)

When record-level certainty is required (e.g., this is patient ID 22814 from our master EMR system), EDM is indispensable. It compares unstructured data to a hashed reference set, driving near-zero false positives and verifying critical data with precision.

AI/LLM-assisted classification

AI shines when there is ambiguity. It is a powerful tool for categorizing novel data types, interpreting inconsistent schemas, or adding context to classification results. Layered with pattern logic, AI raises overall precision and actionability, especially for ambiguous or evolving data.

The modern data classification process

The Varonis Data Security Platform delivers an end-to-end approach to data security for every stage of data classification.

Auto-discover data stores

Automatically map data across your estate, including cloud, SaaS, and hybrid. Hundreds of databases, thousands of buckets, and countless file shares, all continuously inventoried.

Auto-classify with the best tool for the job

Start with a comprehensive full scan to establish a baseline, using a blend of pattern-matching, Exact Data Match (EDM), and AI-assisted classification to ensure precision. Then scale efficiently with incremental scanning, leveraging native activity logs to detect changes and scan only what’s new or modified.

Enrich with context

Go beyond the data type to enrich with subject, topic, and applicable regulations. A file doesn’t just “contain PII.” It contains a “patient intake form containing HIPAA-regulated data.” That context is critical for informing actionable data security.

Monitor data activity and flows

Maintain a unified audit trail that correlates data, identity, network, and sensitivity telemetry to see how classified data is used, where it moves, and by whom, including AI prompt interactions.

Respond to abnormal behavior

Accurate classification powers DLP and risk modeling, enabling UEBA to surface complex adversary behaviors. Enrich alerts with classification context to detect exfiltration, insider threats, and AI-tool misuse. Prioritize alerts by blast radius and confidence and accelerate investigations with Varonis 24x7 MDDR.

Auto-remediate

Classification results need to translate directly into security improvements. This is where automated remediation comes in. Automatically mask sensitive data, auto-label HR files, remove risky guest access, and prevent sensitive data from entering AI prompts without creating unnecessary service tickets or complex integrations.

Make it effortless

With Varonis, there is no pre-configuration, no ongoing policy maintenance, and no manual tuning — just fast, easy deployment and immediate value.

Data classification best practices

Here are some best practices to follow as you implement and execute a data classification policy at scale.

- Use the right technique for the right data type

- Identify which compliance regulations or privacy laws apply to your organization, and build your classification plan accordingly

- Start with a narrow scope and tightly defined patterns (like PCI-DSS)

- Create custom classification rules when needed, but don’t reinvent the wheel

- Combine deterministic and AI methods for best results

- Validate classification results regularly

- Treat classification as an ongoing program, not a one-time project

Bringing it all together

Data classification is essential — but only effective when applied with the right tools for the job. When organizations combine deterministic pattern matching, AI‑accelerated understanding, metadata, and contextual insights, they can finally:

- Locate sensitive data

- Reduce exposure

- Comply with regulations

- Accelerate incident response

- Adopt AI safely and confidently

Modern data protection starts with accurate classification — and accurate classification requires a flexible, multi‑layered approach that reflects the complexity of today’s data.

What should I do now?

Below are three ways you can continue your journey to reduce data risk at your company:

Schedule a demo with us to see Varonis in action. We'll personalize the session to your org's data security needs and answer any questions.

See a sample of our Data Risk Assessment and learn the risks that could be lingering in your environment. Varonis' DRA is completely free and offers a clear path to automated remediation.

Follow us on LinkedIn, YouTube, and X (Twitter) for bite-sized insights on all things data security, including DSPM, threat detection, AI security, and more.

-1.png)