As organizations race to integrate AI into their workflows, many overlook the harsh reality: AI is only as secure as the data that powers it.

From training models on internal documents to deploying copilots and AI assistants that interact with live systems, every AI system houses massive volumes of data — much of it sensitive, proprietary or regulated.

In this guide, you’ll learn what AI data security really entails, the unique risks it can pose to your environment and tips to confidently deploy AI and keep information safe across the entire data lifecycle.

Understanding the AI data security landscape

Let’s start by defining AI security, which encompasses protecting sensitive information across AI tools, workloads and infrastructure.

These components in the current AI landscape require dedicated security attention:

- AI copilots: Tools like Microsoft 365 Copilot that assist users with various tasks

- AI agents: Agents that rely on user permissions to execute specific actions at scale

- Large language models (LLMs): Foundational models used by AI copilots and agents that generate human-like text responses based on prompts

- AI infrastructure: The underlying systems and data stores that power AI workloads

Why do these tools require security measures? Because AI introduces several risks to business data, and leaving them unaddressed increases the likelihood of a breach or data leak. Those risks include:

- Prompt injection: Attackers manipulate AI inputs to make the model perform unintended or malicious actions

- Identity compromise: Attackers gain unauthorized access to a user’s identity to impersonate them and access sensitive systems or data

- Insider threats: Individuals within an org who misuse their access to compromise data, maliciously or negligently

- Training data exfiltration: Unauthorized extraction of sensitive or proprietary information that was used to train an AI model, often through model queries or outputs

- Jailbreaking: A technique used to bypass an AI model’s safety controls or restrictions, enabling it to generate harmful or unauthorized content

Imagine a financial services company that implements Microsoft 365 Copilot to enhance productivity but does so without proper security controls. An employee could prompt the tool for “salary information” and discover they can reach sensitive data they were never meant to see.

The core challenges of AI data security

At its core, AI security is data security. Since AI tools rely on the information you feed them, securing sensitive data before enabling AI in your org needs to be a top priority.

These are the core areas of AI security that orgs should address:

Controlling the AI blast radius

AI systems require access to vast amounts of data to function effectively. This creates what we like to call the blast radius, or the potential scope of damage you could face if something went wrong.

To minimize your blast radius, organizations need to:

- Gain complete visibility into sensitive data that AI systems can access or that users can easily upload in AI sessions

- Identify and revoke excessive permissions across the org that aren’t necessary for AI functionality

- Detect and fix risky AI system misconfigurations before they lead to breaches

For example, if an AI system has excessive access to sensitive customer data, a misconfiguration could expose that information in its responses or store it in ways that violate compliance requirements, significantly expanding your blast radius.
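To make the idea concrete, here is a minimal Python sketch of how a blast radius could be estimated for a single AI identity. Everything in it is hypothetical: the `Resource` type, the `support-copilot` identity and the `grants` and `required` mappings are illustrative stand-ins, not the data model of any real product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    sensitive: bool


def blast_radius(identity, grants, resources):
    """Return the names of sensitive resources the identity can reach."""
    reachable = grants.get(identity, set())
    return {r.name for r in resources if r.sensitive and r.name in reachable}


def excessive_grants(identity, grants, required):
    """Return grants the identity holds but does not need for its AI use case."""
    return grants.get(identity, set()) - required.get(identity, set())


# Hypothetical inventory: two sensitive files and one public one.
resources = [
    Resource("customer_pii.csv", sensitive=True),
    Resource("payroll.xlsx", sensitive=True),
    Resource("product_faq.md", sensitive=False),
]
# What the AI identity can access today vs. what it actually needs.
grants = {"support-copilot": {"customer_pii.csv", "payroll.xlsx", "product_faq.md"}}
required = {"support-copilot": {"customer_pii.csv", "product_faq.md"}}

radius = blast_radius("support-copilot", grants, resources)
to_revoke = excessive_grants("support-copilot", grants, required)
print(sorted(radius))     # ['customer_pii.csv', 'payroll.xlsx']
print(sorted(to_revoke))  # ['payroll.xlsx']
```

In this toy example, revoking the one unneeded grant cuts the sensitive blast radius in half. A real environment would need to resolve group membership, sharing links and inherited permissions before the numbers mean anything.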

Managing AI-generated information

AI systems don’t just consume data; they create it.

AI-generated content can also contain sensitive information that needs to be secured. It’s important to have a strategy in place to properly classify, label and monitor this data.

Here’s how organizations can manage AI-generated information:

- Implement systems to classify AI-generated content based on sensitivity

- Apply appropriate sensitivity labels to ensure proper handling

- Monitor and alert on high levels of sensitive interactions and file creation

For example, a healthcare organization can use AI to analyze patient records. The insights generated might contain protected health information (PHI) that requires specific security controls. Without security oversight of the AI tool, that sensitive health information could be exposed and show up in other conversations.
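As a rough illustration of classifying and labeling AI-generated output, the Python sketch below scans text with a few regular expressions and assigns a sensitivity label. The patterns, the `HIGH_RISK` set and the label names (`restricted`, `confidential`, `general`) are assumptions made for the example; production classifiers rely on far more robust detection than simple regexes.

```python
import re

# Illustrative patterns only; these are assumptions, not a complete PHI/PII detector.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:\s]*\d{6,}\b", re.IGNORECASE),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}
HIGH_RISK = {"ssn", "mrn"}


def classify(text):
    """Return a (label, matched_pattern_names) pair for a piece of generated text."""
    hits = sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))
    if any(hit in HIGH_RISK for hit in hits):
        label = "restricted"
    elif hits:
        label = "confidential"
    else:
        label = "general"
    return label, hits


print(classify("Patient MRN: 12345678 shows improvement"))  # ('restricted', ['mrn'])
print(classify("Contact jane@example.com for details"))     # ('confidential', ['email'])
print(classify("Quarterly summary of admission trends"))    # ('general', [])
```

The returned label could then drive downstream handling, such as applying a sensitivity label to the generated file or raising an alert when a session produces many restricted results.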

Preventing sensitive data exposure in LLMs

Large language models (LLMs) also represent a particular challenge for data security.

Their ability to process and “learn” from vast amounts of information creates risks when sensitive data is inadvertently included in training data or prompts.

When securing LLMs from data risks, organizations need to:

- Discover and take inventory of all AI workloads across your environment

- Identify sensitive data flows to and from AI systems

- Map which AI accounts have access to sensitive data stores

Imagine a legal team where an attorney uses an LLM to help draft contracts. Without proper configuration, confidential client information could be included in prompts, potentially compromising attorney-client privilege.
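One possible guardrail for a scenario like this is to scrub obviously sensitive values from a prompt before it leaves your environment. The Python sketch below is a simplified assumption of how that might look: the `REDACTIONS` patterns and the `redact_prompt` helper are hypothetical, and pattern matching alone will not catch free-text confidential details such as client names or deal terms.

```python
import re

# Hypothetical redaction rules: (pattern, placeholder) pairs applied in order.
REDACTIONS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[EMAIL]"),
]


def redact_prompt(prompt):
    """Replace sensitive matches with placeholders and count how many were found."""
    findings = 0
    for pattern, placeholder in REDACTIONS:
        prompt, count = pattern.subn(placeholder, prompt)
        findings += count
    return prompt, findings


clean, findings = redact_prompt("Draft an NDA for jane@client.com, SSN 123-45-6789.")
print(clean)     # Draft an NDA for [EMAIL], SSN [SSN].
print(findings)  # 2
```

The finding count is also useful on its own: logging it per prompt gives you a simple record of sensitive data flowing toward an AI system, even if you choose to alert rather than redact.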

Building a comprehensive AI security strategy

With AI’s potential to make your blast radius monumental, organizations need to address AI security challenges with a multi-faceted approach.

Create a continuous AI risk defense

Data is constantly moving and growing. The way in which users access data with AI is also constantly changing. Point-in-time assessments won’t keep up with the ever-evolving nature of data, so a core part of your data security strategy must include continuous monitoring and remediation to protect against AI-related risks.

The NIST AI Risk Management Framework (AI RMF) calls out this exact practice, stating organizations should ensure “AI risks and benefits from third-party resources are regularly monitored, and risk controls are applied and documented.”

Specifically, an effective AI security strategy includes:

- Real-time monitoring of third-party AI systems and the data they access

- Automated lockdown of sensitive data before AI breaches can occur

- Quantifiable metrics to demonstrate risk reduction over time

AI data discovery and classification

You can’t protect what you can’t see.

Comprehensive data discovery and classification capabilities are foundational to AI security. When building your strategy, keep the following in mind:

- Identify and classify both human-created and AI-generated data

- Understand data lineage — where data came from and how it flows through systems

- Ensure employees only have access to the sensitive information they need for their role

AI access intelligence

Understanding and controlling which AI systems and users can access sensitive data is critical.

Organizations can do the following to implement AI access intelligence in their strategy:

- Have bi-directional visibility into which AI-enabled users can access sensitive data

- Automatically identify and revoke stale or excessive permissions

- Ensure proper separation of duties between AI systems and sensitive data stores
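As a simple illustration of the stale-permission check, the Python sketch below flags grants that have not been used within a chosen window. The record layout (`principal`, `resource`, `last_used`) and the 90-day default are assumptions for the example, not a recommended policy.

```python
from datetime import date, timedelta


def stale_grants(grants, today, max_idle_days=90):
    """Return (principal, resource) pairs never used or idle longer than the window."""
    cutoff = today - timedelta(days=max_idle_days)
    return [
        (g["principal"], g["resource"])
        for g in grants
        if g["last_used"] is None or g["last_used"] < cutoff
    ]


# Hypothetical access records for one AI agent account and one human user.
grants = [
    {"principal": "sales-agent", "resource": "crm/contracts", "last_used": date(2025, 1, 10)},
    {"principal": "sales-agent", "resource": "hr/salaries", "last_used": None},
    {"principal": "alice", "resource": "finance/forecast", "last_used": date(2025, 5, 28)},
]

flagged = stale_grants(grants, today=date(2025, 6, 1))
print(flagged)  # [('sales-agent', 'crm/contracts'), ('sales-agent', 'hr/salaries')]
```

Flagged grants are candidates for review or revocation. Treating a never-used grant (`last_used` of `None`) as stale is a design choice here; some teams may prefer a grace period for newly issued access.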

Abnormal AI usage detection

Even with proper security controls in place, monitoring for abnormal AI system behavior is essential. Identity-based cyberattacks are at an all-time high, and serious consequences — like breaches — can occur if you’re not actively hunting for threats in your environment.

To detect abnormal AI usage, organizations should:

- Monitor AI prompts and responses for policy violations

- Establish behavior baselines for AI systems and users

- Generate alerts when unusual patterns are detected
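A behavior baseline can be as simple as comparing today's activity with a user's recent history. The Python sketch below uses a basic z-score over daily counts of sensitive prompts; the `is_anomalous` helper, the sample history and the threshold of 3 standard deviations are illustrative assumptions, and real behavior analytics draw on many more signals than a single counter.

```python
from statistics import mean, stdev


def is_anomalous(history, today_count, threshold=3.0):
    """Flag today's count if it sits far above the baseline built from history."""
    if len(history) < 2:
        return False  # not enough data to establish a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        return today_count > baseline
    return (today_count - baseline) / spread > threshold


# Hypothetical daily counts of prompts that touched sensitive data for one user.
history = [4, 6, 5, 7, 5, 6, 4]

print(is_anomalous(history, today_count=6))   # False
print(is_anomalous(history, today_count=40))  # True
```

When the function returns `True`, that is the point at which an alert would be generated for investigation.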

Preparing for AI compliance requirements

As AI adoption accelerates, regulatory frameworks are evolving to address the associated risks we’ve mentioned.

The EU AI Act, for example, introduces new compliance requirements for organizations using AI systems and has inspired other regulations.

Organizations can keep compliance in mind when deploying AI tools by:

- Understanding emerging AI regulations in relevant jurisdictions and preparing your org to comply with them in the future

- Documenting AI system usage, including data flows and access controls

- Implementing controls that demonstrate compliance with AI-specific requirements

Multinational corporations may need to establish different controls for AI systems used in the EU versus those used in other regions, ensuring each deployment meets local regulatory requirements.

Deploying AI securely with a unified approach

Rather than addressing AI security with disconnected point solutions, a growing number of organizations are adopting unified platforms that provide comprehensive visibility and control.

This unified approach typically includes:

- Real-time visibility into your data security posture across all AI systems and data stores

- Automated prevention capabilities that continuously reduce your potential AI blast radius

- Proactive detection of threats through data-centric user and entity behavior analytics

Consider a pharmaceutical company developing new drugs with the assistance of AI. A unified security approach in this scenario would:

- Automatically discover and classify research data across all environments

- Control which AI tools can access different categories of research information

- Monitor for unusual access patterns that might indicate data theft attempts

- Automatically remediate permission issues before they lead to AI exposure

Strengthening AI security with Varonis

To secure data for AI, organizations need deep, continuous visibility into where sensitive data lives, who can access it and how it’s being used, and none of that is achievable manually.

Varonis helps organizations safeguard the data that fuels and is impacted by AI systems every second, everywhere.

Whether you’re training models on internal documents, using generative AI to assist employees or managing third-party AI tools, Varonis gives you the insight and control to secure your most valuable data assets.

Our Data Security Platform (DSP) gives organizations:

- AI data discovery and classification: Get complete, current and contextual data classification for your human and AI-generated data, no matter the size of your data store.

- AI access intelligence: Understand which user and AI accounts can access sensitive data and automatically revoke stale or excessive permissions without disruption.

- Detect abnormal AI usage: Monitor prompts and build behavior baselines for every user and device to detect when they violate policy, behave abnormally or are compromised.

Here are more of the unique capabilities of our DSP that help you keep AI secure:

Varonis AI Security — your always-on AI defense

Varonis AI Security continuously identifies AI risks in real time, flags active violations and automatically fixes issues before they become data breaches. With quantifiable results, you, your leadership team and auditors can see what your org’s AI risk is and how it's decreasing over time.

AI Security makes intelligent decisions about which data to restrict from AI using Varonis’ patented permissions recommendation algorithms that factor in data sensitivity, staleness, user profile and more. Even if you haven’t right-sized access, AI Security has you covered.

With Varonis AI Security, customers have always-on defenses to secure AI usage, including:

- Real-time risk analysis to show you exactly which sensitive data is exposed to AI

- Automated risk remediation to continually eliminate data exposure at scale

- Behavior-based threat detection to identify abnormal or malicious behavior

- 24x7x365 alert response to investigate, contain and stop data threats with Varonis MDDR

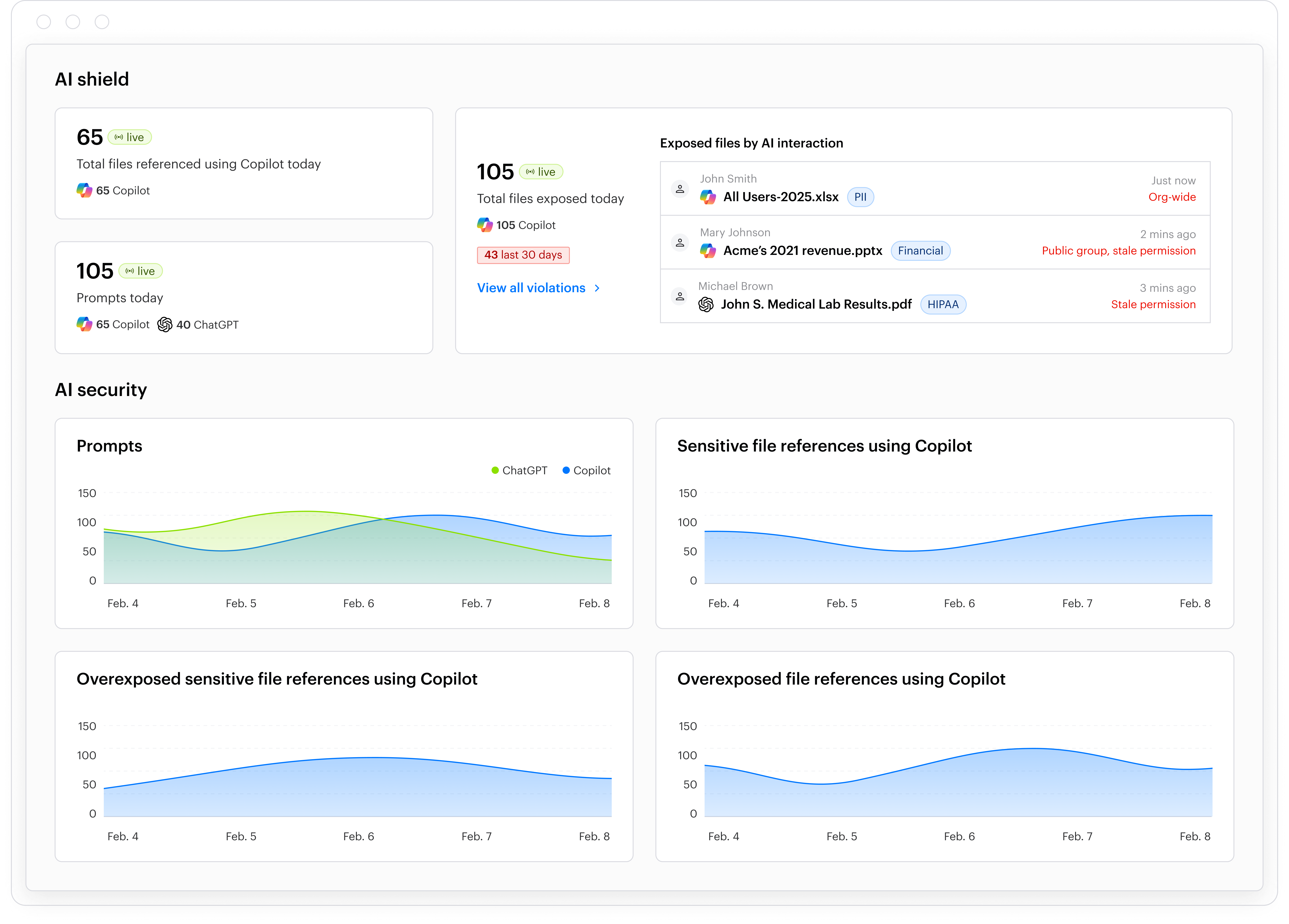

The AI Security dashboard shows your actual AI risk in real time, including policy violations and suspicious prompts.

Athena AI

Athena AI is embedded within the Varonis Data Security Platform and helps close the skills gap while drastically speeding up security and compliance tasks.

With it, you can use natural language to conduct in-depth investigations and analyses more efficiently, transforming all your users into formidable data defenders.

Varonis for Microsoft 365 Copilot

The security model for Microsoft 365 Copilot relies on a user's existing Microsoft 365 permissions to determine which files, emails, chats, notes, etc., can be used to generate AI responses.

If permissions are not right-sized before enabling an AI tool like this in your environment, the blast radius and the likelihood of a data breach are dramatically increased.
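The effect of inherited permissions can be shown with a toy Python sketch. It is not Microsoft's implementation; the `retrieve_for_user` function, the document names and both access control lists are hypothetical, and the sketch only illustrates the general principle that an assistant can surface whatever the requesting user is already allowed to read.

```python
def retrieve_for_user(user, query, documents, acl):
    """Return IDs of documents that match the query and that the user may read."""
    needle = query.lower()
    return [
        doc_id
        for doc_id, text in documents.items()
        if user in acl.get(doc_id, set()) and needle in text.lower()
    ]


# Hypothetical content and two versions of the same access control list.
documents = {
    "hr/salaries-2024.xlsx": "Salary information for all employees",
    "wiki/benefits.md": "How to read your salary statement",
}
acl_overshared = {
    "hr/salaries-2024.xlsx": {"hr-lead", "intern"},
    "wiki/benefits.md": {"hr-lead", "intern"},
}
acl_right_sized = {
    "hr/salaries-2024.xlsx": {"hr-lead"},
    "wiki/benefits.md": {"hr-lead", "intern"},
}

before = retrieve_for_user("intern", "salary", documents, acl_overshared)
after = retrieve_for_user("intern", "salary", documents, acl_right_sized)
print(before)  # ['hr/salaries-2024.xlsx', 'wiki/benefits.md']
print(after)   # ['wiki/benefits.md']
```

The assistant behaves identically in both runs. Only the underlying permissions changed, which is why right-sizing access before rollout matters.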

Varonis for Microsoft 365 Copilot provides essential security controls to enhance your Copilot deployment and continuously secure your data after rolling out the tool.

Key features include:

- Limiting access: Control sensitive data access to prevent unintentional exposure

- Monitoring prompts: Keep an eye on Copilot prompts in real time

- Detecting abuse: Identify any misuse or abnormal behavior

Together, Varonis and Microsoft are committed to helping organizations confidently roll out AI while constantly assessing and improving their Microsoft 365 data security posture behind the scenes before, during and after deployment. Learn more about our partnership with Microsoft.

Quickly identify when conversations with Copilot appear malicious and surface sensitive data

Varonis for Agentforce

Salesforce also enables AI through the use of agents. Agentforce agents inherit the permissions of the users who run them. So, if users have excessive access, an agent can expose sensitive data.

Limiting your blast radius, monitoring agent activity and detecting abnormal usage are key in keeping AI agents secure.

Varonis empowers security teams and Salesforce admins to identify sensitive data, right-size access to that data and prevent unauthorized activity in their entire Salesforce environments, including Agentforce, and other SaaS apps.

Investigate which Agentforce prompts result in sensitive information being surfaced and whether the files were accessed.

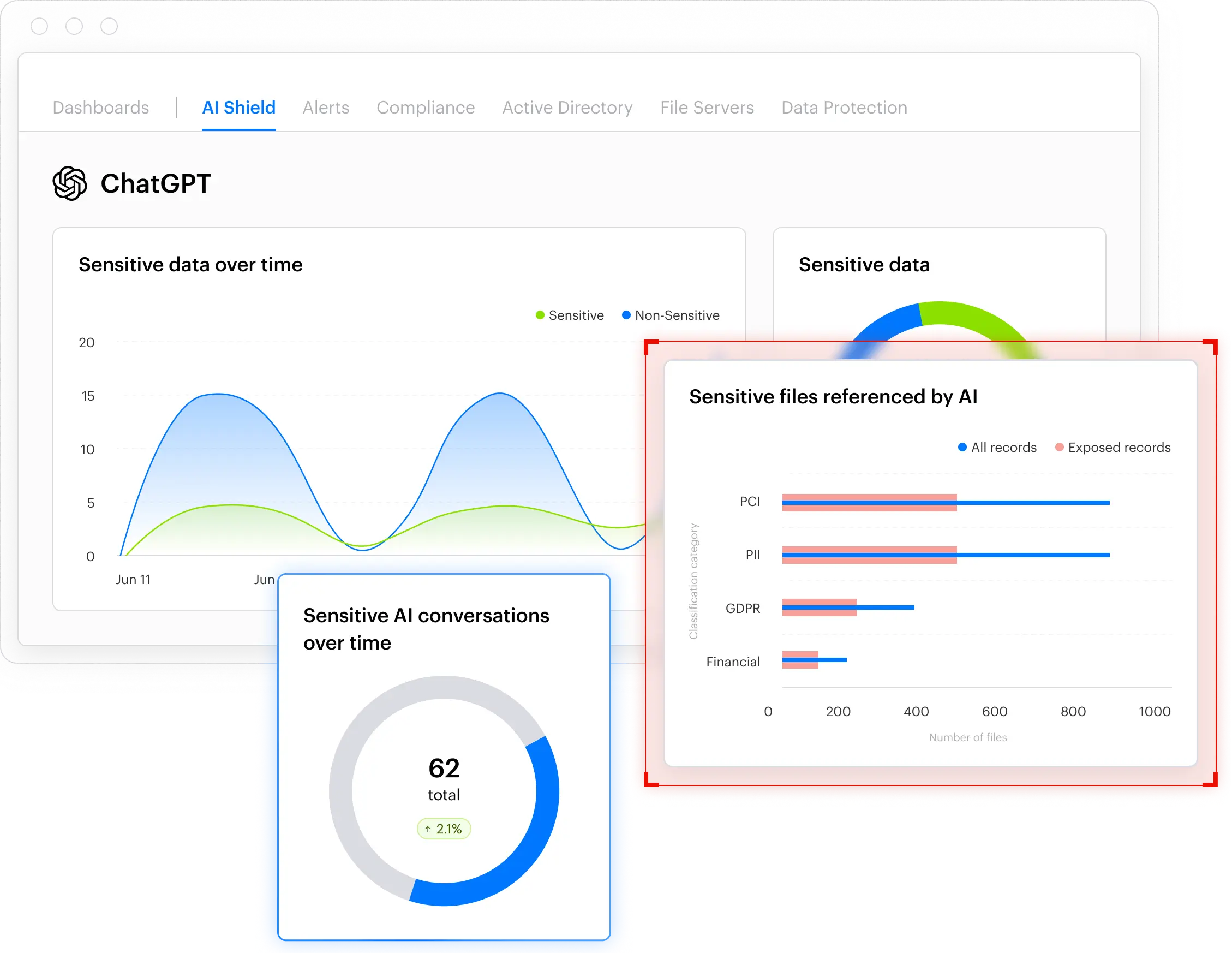

Varonis for ChatGPT Enterprise

Varonis complements ChatGPT Enterprise by adding continuous, industry-leading data security and 24x7 monitoring, helping organizations adopt AI with greater assurance.

Varonis continuously right-sizes permissions across an organization’s IT environment, limiting sensitive data from unintentionally flowing into AI applications and LLMs. With this new integration, Varonis provides additional monitoring of how users interact with ChatGPT and alerts security teams to abnormal and risky behavior.

As users copy and upload data from almost any resource, this lack of visibility can lead to overexposure and data leakage. Varonis brings ChatGPT out of the shadows.

Our platform’s coverage doesn’t stop there. Varonis can also integrate with AWS, Azure, ServiceNow, Snowflake, Databricks and more to keep your information protected wherever it lives. Explore our coverage options.

Reduce your risk without taking any.

Ready to see how Varonis can strengthen your AI data security posture?

Get started with a free AI Risk Assessment. In less than 24 hours, you’ll have a clear, risk-based view of the data that matters most and a clear path to automated remediation.

During the assessment, you’ll have full access to our platform and a dedicated IR analyst. Even if you decide not to choose Varonis, the findings from your assessment are yours to keep — no strings attached.

Request your assessment today.

Learn more about AI security

Curious how Microsoft Copilot’s permission model works? Are you looking for steps to deploy Agentforce? Perhaps you need more insight on MCP Servers?

Learn more about AI data security with our other articles:

What should I do now?

Below are three ways you can continue your journey to reduce data risk at your company:

- Schedule a demo with us to see Varonis in action. We'll personalize the session to your org's data security needs and answer any questions.

- See a sample of our Data Risk Assessment and learn the risks that could be lingering in your environment. Varonis' DRA is completely free and offers a clear path to automated remediation.

- Follow us on LinkedIn, YouTube, and X (Twitter) for bite-sized insights on all things data security, including DSPM, threat detection, AI security, and more.
