Imagine if hackers could give their scam websites a cloak of invisibility. The tech world calls this trick cloaking — showing one web page to regular people and a harmless page to the guards. That’s essentially what’s happening as cybercriminals start to leverage AI-powered cloaking services to shield phishing pages, fake stores, and malware sites from prying eyes.

In recent years, threat actors have begun leveraging the same advanced traffic-filtering tools once used in shady online advertising, using artificial intelligence and clever scripting to hide their malicious payloads from security scanners and show them only to intended victims.

This trend — essentially cloaking-as-a-service (CaaS) — is quietly reshaping how phishing and fraud infrastructure operates, even if it hasn’t yet hit mainstream headlines. In this post, we’ll explore how platforms like Hoax Tech and JS Click Cloaker are enabling this evolution, and how defenders can fight back.

The rise of AI-powered cloaking services

Cloaking isn’t new. Years ago, shady online advertisers used it to dodge site rules. In late 2024, Google’s Trust and Safety team warned that criminals were now doing the same thing – only with AI, which makes the trick much harder to spot.

By presenting a “white page” (harmless content) to automated reviews and a “black page” (the scam) to real users, fraudsters can keep malicious sites running under the radar. As Google described it, cloaking is “specifically designed to prevent moderation systems and teams from reviewing policy-violating content,” allowing scammers to deploy fake bank sites, crypto scams, and other swindles directly to users.

Behind this trend is an emerging ecosystem of cloaking-as-a-service providers. These services package advanced detection evasion techniques — JavaScript fingerprinting, device and network profiling, machine learning analysis, and dynamic content swapping — into user-friendly platforms that anyone (including criminals) can subscribe to.

Cybercriminals are effectively treating their web infrastructure with the same sophistication as their malware or phishing emails, investing in AI-driven traffic filtering to protect their scams. It’s an arms race where cloaking services help attackers control who sees what online, masking malicious activity and tailoring content per visitor in real time.

This increases the effectiveness of phishing sites, fraudulent downloads, affiliate fraud schemes, and spam campaigns, which can stay live longer and snare more victims before being detected.

The AI cloaking platforms

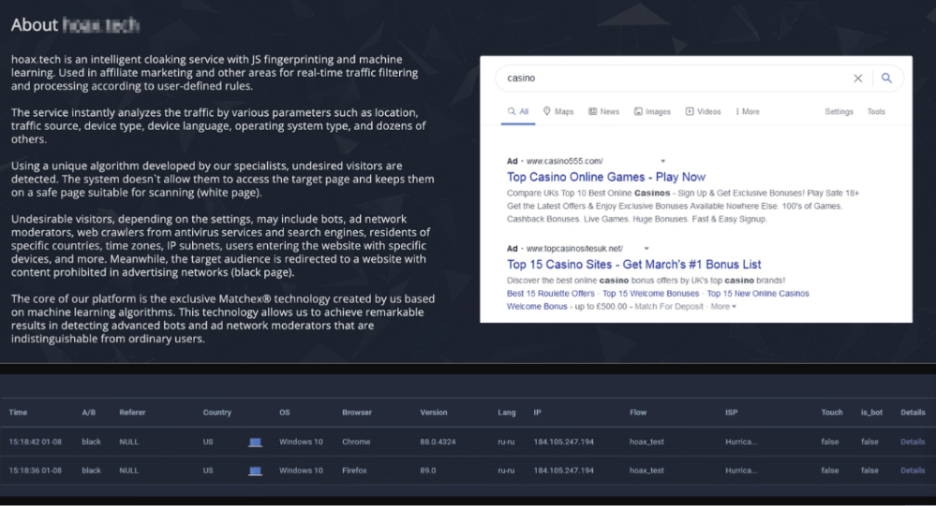

To understand how these AI cloaking systems work, let’s look at two notable platforms leading the charge — Hoax Tech and JS Click Cloaker. Both services are advertised as intelligent cloaking solutions that shield traffic and boost conversion for marketers, but the same features also make them ideal for shielding criminal infrastructure.

Hoax Tech: fingerprinting and ML in action

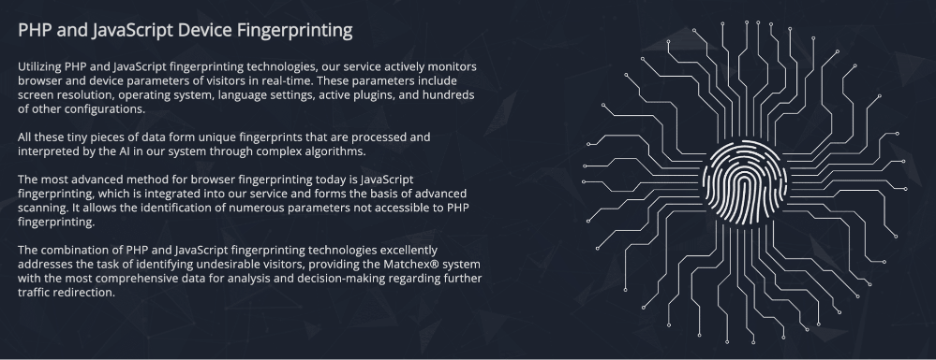

Hoax Tech is an online service that hides bad sites. It does this by reading tiny clues on a visitor’s device, a trick called JavaScript fingerprinting (a quick scan of your screen size, browser, and other digital ‘fingerprints’), and by using machine learning to spot patterns. Originally created for affiliate marketers running gray (policy-violating) offers, Hoax Tech’s technology is now being repurposed to defend phishing and scam sites from discovery. The service filters incoming web traffic in real time, examining each visitor’s digital fingerprint across hundreds of data points: location, device type, OS, browser plugins, language, IP reputation, and more.

Hoax Tech’s advertising materials

Using a custom AI engine called Matchex, Hoax Tech compares these fingerprint parameters against a massive and continuously growing database to spot even subtle signs of bots or unwanted snoops. This machine learning system is self-learning, analyzing patterns from hundreds of thousands of visitors and finding common traits among advanced bots that might look innocuous in isolation. It doesn’t just rely on static rules — its Matchex AI adapts to new bot behaviors over time, making it harder for security crawlers to escape detection.

Hoax Tech’s reference to its AI engine

Even well-disguised crawlers and ad network moderators get flagged by this multilayered approach (JavaScript fingerprint + ML), and are served a “white page” instead of the real content. Legitimate human visitors (the intended targets) are transparently redirected to the actual malicious landing page (the “black page”) while bots see only innocuous content.

JS Click Cloaker: bulletproof traffic filtering with AI

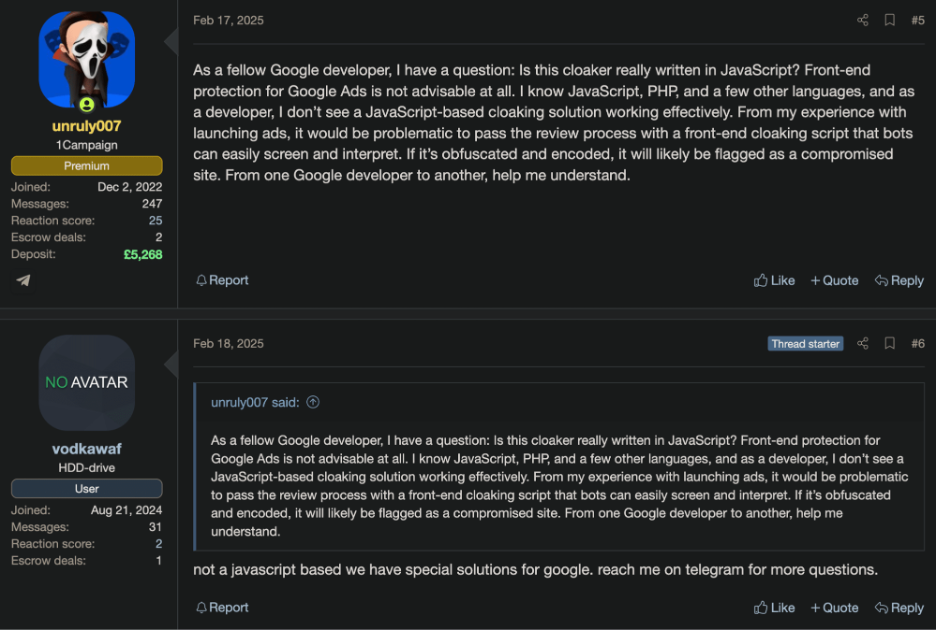

Another key tool is JS Click Cloaker, which calls itself the “#1 Traffic Security Platform” and a “bulletproof cloaker.” It’s pitched at marketers, and used by cybercriminals, who want to get the most out of their campaigns by blocking bots. Despite the name, the seller on a cybercrime network said the tool doesn’t actually rely on JavaScript (JS) to work on Google.

A cybercrime network comment on the usage of JavaScript

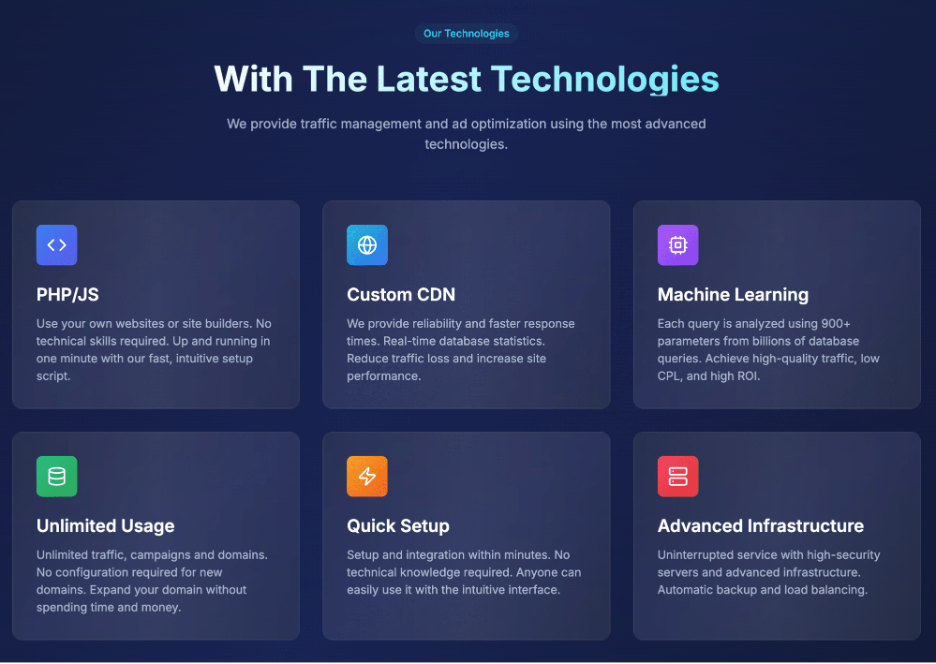

Under the hood, it allegedly leverages machine learning across 900+ parameters per visit, drawing on a huge database of historical queries. Everything from IP address and user-agent to behavioral cues can be evaluated. The service claims every click undergoes analysis against billions of data points to decide if it’s genuine or suspicious. If anything looks suspicious, like an IP address that belongs to a large cloud provider or a headless browser (an automated browser with no visible window), the system blocks or reroutes the click.

JS Click Cloaker referencing 900+ parameters

Like Hoax Tech, JS Click Cloaker allows highly granular rules: customers can filter traffic by geography, device type, referral source, time of day, and more (hundreds of parameters in all) to build custom bot/no-bot criteria. It claims to provide 100% bot protection by combining these rules with its AI detection engine. JS Click’s platform emphasizes ease of use (quick setup, no coding required) and offers features like A/B testing and traffic splitting, which indicate how professionalized these cloaking tools have become.

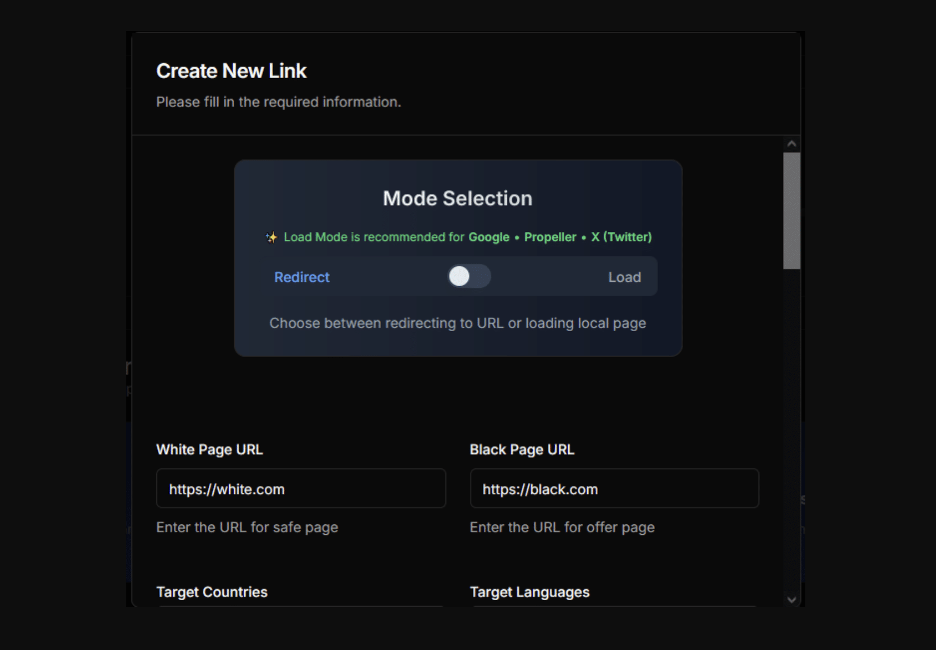

JS Click Cloaker’s “white page” and “black page” functionality

Essentially, it’s a one-stop traffic management hub: part anti-bot system, part campaign optimizer. For cybercriminals, this means they can subscribe to JS Click’s service for as little as ~$100 a month and use enterprise-grade cloaking to protect their scam pages at scale — treating phishing and malware delivery like a business operation with its own security layer.

How the deception works

At the core of these platforms is a fast decision system that filters each visitor based on how they behave. The goal is to show security scanners a clean page while delivering the real scam to human targets.

- If the visitor is flagged as a bot or unwanted (for example, a crawler, scanner, or reviewer), the system shows them a white page. This is usually a simple and safe website that looks normal and passes automated checks.

- If the visitor looks like a real person, they are redirected to the black page. This is the actual scam site, which could be a fake login page, crypto giveaway, malware prompt, or phishing form.

- From the victim’s perspective, they just landed on a regular site. They have no idea that scanners were shown a different version or that cloaking is even happening.

- Because scanners never saw the black page, the malicious site avoids detection. It stays alive longer and reaches more victims before anyone flags it for takedown.

This dual-personality delivery is extremely effective at deceiving security tools. URL scanning bots and ad network crawlers report back that the website looks clean because they only saw the white page. Meanwhile, actual victims get the scam content and potentially fall prey. It’s essentially selective camouflage, and AI-based cloaking services have elevated it to a science. By using JavaScript fingerprinting to profile devices (screen resolution, browser plugins, timezone, touch capabilities, and more) and continual machine learning analysis of what constitutes a normal vs suspicious visitor, cloakers can filter traffic with incredible granularity.

For example, they might only let clicks with valid advertising IDs (like Google Ads gclid parameters) through to the black page, while blocking others. They can detect when a bot tries to masquerade as a regular browser but fails some subtle check (maybe it doesn’t properly execute a visual CAPTCHA test or loads the page too quickly), then instantly shunt that bot to the white page. The result is that phishing kits, scam sites, and even malware drop pages can evade detection by automated scanners for far longer than before. Scammers have basically added an AI-powered defensive layer to their attack chain, forcing defenders to work harder to uncover the real content.

How to uncloak malicious sites

So, how can defenders and security teams counter these cloaking techniques? It’s certainly a challenge, but not insurmountable. Forward-thinking security companies are already adapting their detection strategies to neutralize cloakers through a mix of behavioral analysis and clever scanning:

Real-time scanning

Traditional static link-scanners can be defeated by cloaking, since they only see the white page. Modern defenses use runtime analysis in virtual browsers to fully render suspicious pages and observe their behavior. Varonis, for example, can load a URL in an instrumented browser environment to trick the cloaker into thinking it’s a normal user. By doing this in real time, defenders can catch the moment the site switches content or redirects to a black page. This behavioral detection — looking at what the page does when interacted with — can expose cloaked malicious content that wouldn’t be apparent from a single GET request.
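As a rough illustration of catching "the moment the site switches content," the sketch below assumes an instrumented browser has already recorded periodic snapshots of a page (its URL and rendered HTML). The `Snapshot` structure and the `content_changes` function are hypothetical names for this example, not part of any product described here, and ordinary dynamic pages also change their DOM, so the output is a lead to investigate rather than a verdict.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    """One observation taken by an instrumented browser (hypothetical structure)."""
    seconds: float  # time since navigation started
    url: str        # address bar URL at that moment
    html: str       # rendered DOM serialized as HTML


def digest(html: str) -> str:
    """Stable fingerprint of the rendered content."""
    return hashlib.sha256(html.encode("utf-8")).hexdigest()


def content_changes(snapshots):
    """Return (seconds, reason) events where the page redirected or swapped content.

    Consecutive snapshots are compared pairwise: a URL change is reported as a
    redirect, an HTML change at the same URL as a content swap.
    """
    events = []
    for prev, cur in zip(snapshots, snapshots[1:]):
        if cur.url != prev.url:
            events.append((cur.seconds, "redirect"))
        elif digest(cur.html) != digest(prev.html):
            events.append((cur.seconds, "content_swap"))
    return events


timeline = [
    Snapshot(0.0, "https://example.test/", "<p>Welcome to our blog</p>"),
    Snapshot(1.0, "https://example.test/", "<p>Welcome to our blog</p>"),
    Snapshot(2.0, "https://example.test/", "<form>Sign in to your bank</form>"),
    Snapshot(3.0, "https://example.test/offer", "<form>Sign in to your bank</form>"),
]
print(content_changes(timeline))
```

In practice the snapshots would come from a real browser automation tool, and the comparison would likely ignore noise such as timestamps or rotating ad markup before hashing.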

Multiple vantage points

Security teams are also learning to scan from various perspectives. That means trying the suspected URL with different fingerprints — simulating a visit from a typical user device, then from a known bad IP, then from an EU country, then from a US datacenter, etc. By comparing the results, it becomes obvious if a site is cloaking (e.g., it shows a “404 not found” to one client and a login form to another). This kind of differential analysis can trigger an alert that the site is deceptive even if the malicious content isn’t immediately seen on the first attempt.
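The differential analysis described above can be sketched in a few lines. This example assumes the responses have already been collected from each vantage point; the `Observation` structure, the profile names, and the 0.6 similarity threshold are illustrative assumptions, not values taken from any real scanner.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from itertools import combinations


@dataclass(frozen=True)
class Observation:
    """What one simulated visitor profile saw (hypothetical structure)."""
    profile: str    # e.g. "residential-mobile", "us-datacenter"
    status: int     # HTTP status code
    final_url: str  # URL after any redirects
    body: str       # rendered page text or HTML


def similarity(a: str, b: str) -> float:
    """Rough textual similarity between two bodies, from 0.0 to 1.0."""
    return SequenceMatcher(None, a, b).ratio()


def find_divergent_pairs(observations, min_similarity=0.6):
    """Return pairs of profiles that received materially different responses."""
    divergent = []
    for first, second in combinations(observations, 2):
        differs = (
            first.status != second.status
            or first.final_url != second.final_url
            or similarity(first.body, second.body) < min_similarity
        )
        if differs:
            divergent.append((first.profile, second.profile))
    return divergent


results = [
    Observation("residential-mobile", 200, "https://example.test/login",
                "<form>Enter your password</form>"),
    Observation("us-datacenter", 404, "https://example.test/", "404 not found"),
    Observation("eu-datacenter", 404, "https://example.test/", "404 not found"),
]
print(find_divergent_pairs(results))
```

Here the residential profile diverges from both datacenter profiles, while the two datacenter profiles agree with each other, which is the pattern one would expect from a cloaked site. Legitimate sites also vary by region or device, so a divergence is a signal to escalate, not proof of cloaking.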

Heuristic signals

Another approach is to look for the tell-tale signs of cloaking scripts. Cloaking services often inject characteristic JavaScript or make certain server calls (for example, loading fingerprinting libraries or checking for specific cookies/headers). Advanced threat detection tools can flag these indicators. If a page’s code is heavily focused on fingerprinting the user (collecting unusual amounts of environment data) and contains logic to swap content, that’s a big red flag of cloaking. Even when the cloak itself can't easily be bypassed, the presence of these indicators is reason enough to treat the site as suspicious.
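A minimal sketch of this kind of heuristic follows. It scans page source for common browser properties that fingerprinting scripts tend to read, plus simple content-swapping calls. The pattern lists, the `cloaking_indicators` function, and the threshold of three fingerprinting hits are assumptions chosen for illustration; they are not signatures of Hoax Tech, JS Click Cloaker, or any specific service, and many legitimate analytics scripts read the same properties.

```python
import re

# Hypothetical indicator patterns; real rules would be tuned on observed samples.
FINGERPRINT_PATTERNS = {
    "screen_size": re.compile(r"screen\.(width|height)"),
    "plugins": re.compile(r"navigator\.plugins"),
    "timezone": re.compile(r"getTimezoneOffset|resolvedOptions\(\)\.timeZone"),
    "touch": re.compile(r"maxTouchPoints|ontouchstart"),
    "webdriver": re.compile(r"navigator\.webdriver"),
    "canvas": re.compile(r"toDataURL|getImageData"),
}
CONTENT_SWAP_PATTERNS = {
    "redirect": re.compile(r"location\.(replace|assign|href\s*=)"),
    "dom_rewrite": re.compile(r"document\.write|\.innerHTML\s*="),
}


def matched(patterns, page_source):
    """Names of the patterns that appear at least once in the source."""
    return sorted(name for name, pat in patterns.items() if pat.search(page_source))


def cloaking_indicators(page_source, min_fingerprint_hits=3):
    """Flag pages that both fingerprint heavily and contain swap logic."""
    fingerprinting = matched(FINGERPRINT_PATTERNS, page_source)
    content_swap = matched(CONTENT_SWAP_PATTERNS, page_source)
    return {
        "fingerprinting": fingerprinting,
        "content_swap": content_swap,
        "suspicious": len(fingerprinting) >= min_fingerprint_hits
        and bool(content_swap),
    }


sample = (
    "var d=[screen.width,screen.height,navigator.plugins.length,"
    "new Date().getTimezoneOffset(),navigator.webdriver];"
    "if(ok){window.location.replace('/offer');}"
)
print(cloaking_indicators(sample))
```

Requiring both signals (several fingerprinting reads and a swap or redirect) is a deliberate choice to cut down on false positives from ordinary analytics code, at the cost of missing cloakers that make the decision entirely server-side.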

Varonis analyzes behavior in real-time by running virtual browsers to check web page content and server responses, giving clear results about phishing threats. It’s AI fighting AI — Varonis uses machine learning and automation to combat attackers who use their own AI tools to hide threats.
