Phishing remains one of the most successful ways to infiltrate an organization. We’ve seen a massive number of malware infections stemming from users opening infected attachments or clicking links that send them to malicious sites that try to compromise vulnerable browsers or plugins.

Now, with organizations moving to Microsoft 365 at such a rapid pace, we’re seeing a new attack vector: Azure Applications.

As you’ll see below, attackers can create, disguise, and deploy malicious Azure apps to use in their phishing campaigns. Azure apps don’t require approval from Microsoft and, more importantly, they don’t require code execution on the user’s machine, which makes it easy to evade endpoint detection and antivirus.

Once the attacker convinces the victim to click and install a malicious Azure app, they can map the user’s organization, access the victim’s files, read their emails, send emails on their behalf (great for internal spear phishing), and a whole lot more.

What are Azure Applications?

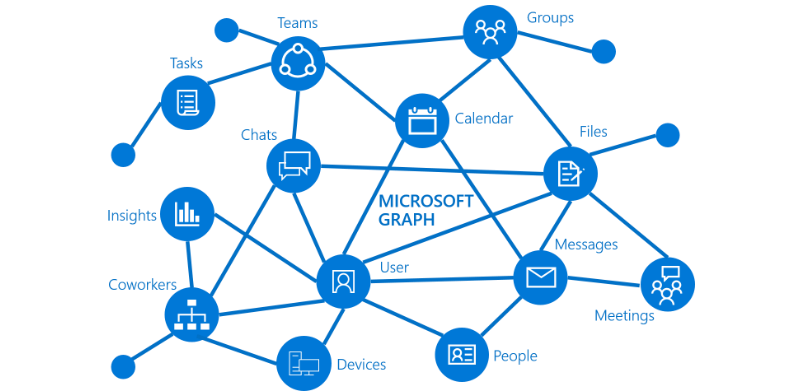

Microsoft created the Azure App Service to give users the ability to create custom cloud applications that can easily call and consume Azure APIs and resources, making it easy to build powerful, customizable programs that integrate with the Microsoft 365 ecosystem.

One of the more common Azure APIs is the MS Graph API. This API allows apps to interact with the user’s 365 environment—including users, groups, OneDrive documents, Exchange Online mailboxes, and conversations.

Just as your iPhone asks whether it’s OK for an app to access your contacts or location, the Azure app authorization process asks the user to grant the app access to the resources it needs. A clever attacker can use this opportunity to trick a user into giving their malicious app access to one or more sensitive cloud resources.

How the Attack Works

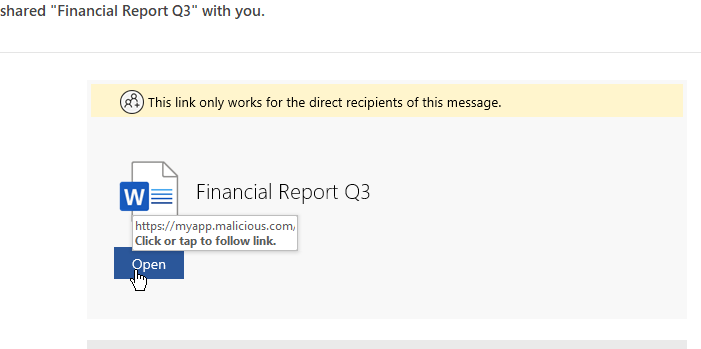

To perform this attack, the adversary must have a web application and an Azure tenant to host it. Once we set up shop, we can send a phishing campaign with a link to install the Azure app:

The link in the email directs the user to our attacker-controlled website (e.g., https://myapp.malicious.com), which seamlessly redirects the victim to Microsoft’s login page. The authentication flow is handled entirely by Microsoft, so multi-factor authentication isn’t a viable mitigation: the victim completes the MFA challenge themselves before granting consent.

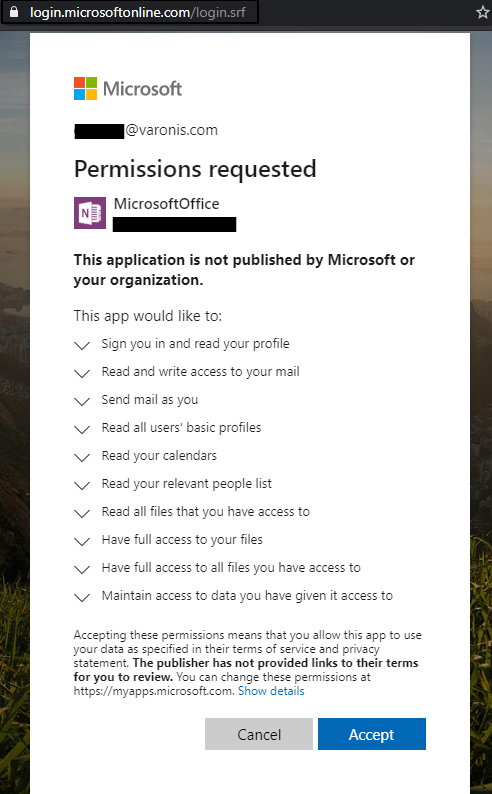

Once the user logs into their O365 instance, a token will be generated for our malicious app and the user will be prompted to authorize and give it the permissions it needs. This is how it looks to the end-user (and should look very familiar if they install apps in SharePoint or Teams):

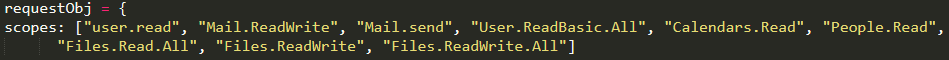

On the attacker’s side, here are the MS Graph API permissions that we’re requesting in our app’s code:

As you can see, the attacker has control over the application’s name (we made ours “MicrosoftOffice”) and the icon (we used the OneNote icon). The URL is a valid Microsoft URL and the certificate is valid.

Under the application’s name, however, is the name of the attacker’s tenant and a warning message, neither of which can be hidden. An attacker’s hope is that a user will be in a rush, see the familiar icon, and move through this screen as quickly and thoughtlessly as they’d move through a terms of service notice.

By clicking “Accept”, the victim grants our application the permissions on behalf of their user—i.e., the application can read the victim’s emails and access any files they have access to.
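The permissions shown on the consent screen come from the `scope` parameter of the authorization request, so a defender can read them straight from a suspicious link before anyone clicks “Accept”. Here is a minimal sketch, assuming the link points at the standard Microsoft identity platform v2.0 authorize endpoint. The `inspect_consent_link` helper, the watch list of scopes, and the sample `client_id` are illustrative assumptions, not part of our PoC.

```python
from urllib.parse import urlparse, parse_qs

# Illustrative watch list only; tune it to your own risk appetite.
HIGH_RISK_SCOPES = {
    "mail.read", "mail.readwrite", "mail.send",
    "files.read.all", "files.readwrite.all",
    "user.read.all", "calendars.readwrite",
}


def inspect_consent_link(url):
    """Summarize what an OAuth authorization link is asking for."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)

    def first(name):
        values = params.get(name)
        return values[0] if values else None

    # Scopes are space-delimited; some are written as full resource URIs,
    # so keep only the final path segment (".../Mail.Read" -> "Mail.Read").
    scopes = [s.rsplit("/", 1)[-1] for s in (first("scope") or "").split()]
    return {
        "microsoft_host": parsed.hostname == "login.microsoftonline.com",
        "client_id": first("client_id"),
        "redirect_uri": first("redirect_uri"),
        "scopes": scopes,
        "risky_scopes": sorted(s for s in scopes if s.lower() in HIGH_RISK_SCOPES),
    }


# Hypothetical link: the client_id is made up, and the redirect host is the
# example attacker site used earlier in this post.
link = (
    "https://login.microsoftonline.com/common/oauth2/v2.0/authorize"
    "?client_id=00000000-0000-0000-0000-000000000000"
    "&response_type=code"
    "&redirect_uri=https%3A%2F%2Fmyapp.malicious.com%2Fcallback"
    "&scope=User.Read%20Mail.Read%20Mail.Send%20Files.ReadWrite.All"
)
report = inspect_consent_link(link)
print(report["redirect_uri"], report["risky_scopes"])
```

Note that a legitimate Microsoft host is exactly what you would expect in this attack, so the redirect URI and the requested scopes are the more telling signals.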

This step is the only one that requires the victim’s consent. From this point forward, the attacker can use the user’s account and resources, within the permissions granted, without any further interaction from the victim.

After granting consent to the application, the victim will be redirected to a website of our choice. A nice trick can be to map the user’s recent file access and redirect them to an internal SharePoint document so the redirection is less suspicious.

Post-Intrusion Possibilities

This attack is fantastic for:

- Reconnaissance (enumerating users, groups, objects in the user’s 365 tenant)

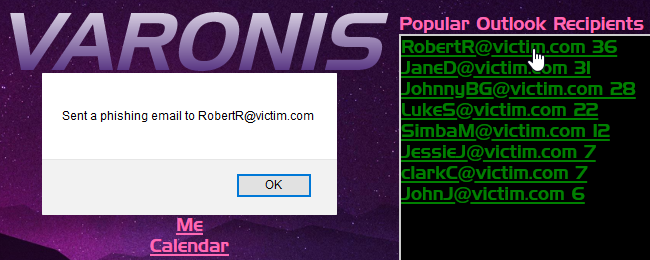

- Spear phishing (internal-to-internal)

- Stealing files and emails from Office 365

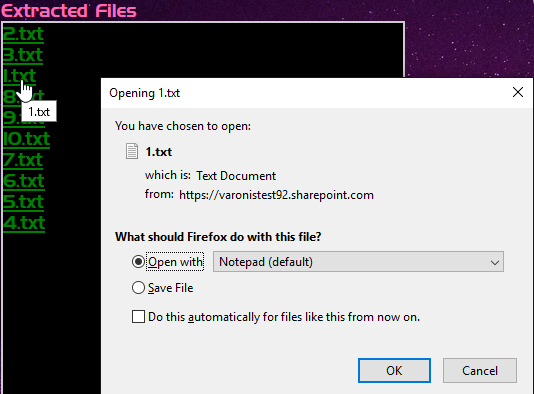

To illustrate the power of our Azure app, we made a fun console to display the resources we gained access to in our proof-of-concept:

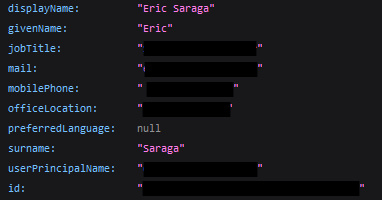

The Me option shows the victim we phished:

Users will show us the above metadata for every single user in the organization, including their email addresses, mobile numbers, job titles, and more depending on the organization’s Active Directory attributes. This API call alone could trigger a massive PII violation, especially under GDPR and CCPA.

The Calendar option shows us the victim’s calendar events. We can also set up meetings on their behalf, view existing meetings, and even free up time in their day by deleting meetings they set in the future.

Perhaps the most critical function in our console app is the Recent Files function that lets us see any file the user accessed in OneDrive or SharePoint. We can also download or modify files (malicious macros for persistence, anyone?).

IMPORTANT: When we access a file via this API, Azure generates a unique link. This link is accessible by anyone from any location—even if the organization does not allow anonymous sharing links for normal 365 users.

API links are special. We’re honestly not sure why they aren’t blocked by the organization’s sharing link policy, but it could be that Microsoft doesn’t want to break custom apps if the policy changes. An application can request a download link or a modify link for a file; in our PoC we requested both.

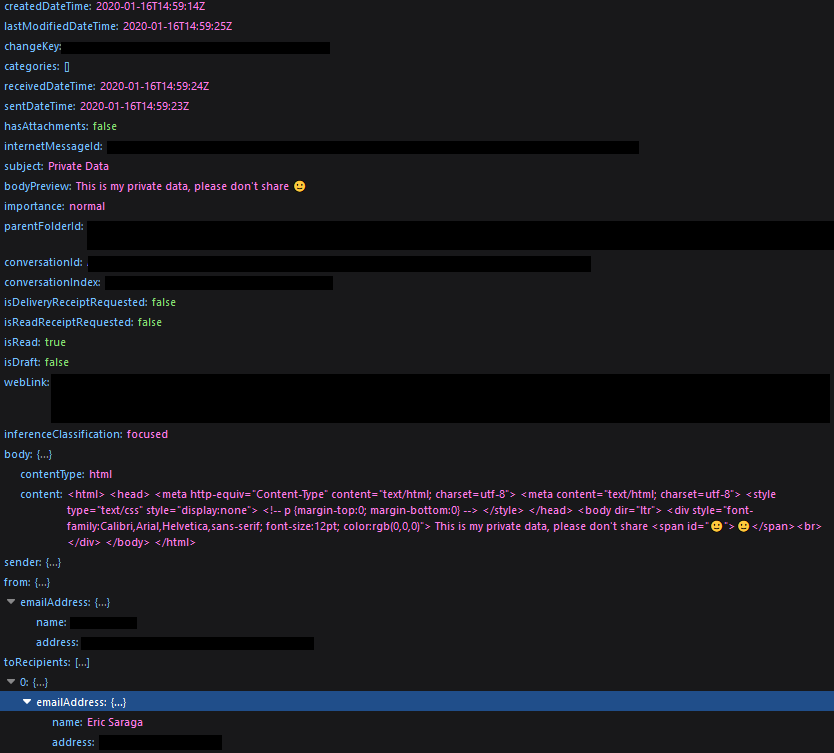

The Outlook option gives us complete access to our victim’s email. We can see the recipients of any message, filter by high priority emails, send emails (i.e., spear phish other users), and more.

By reading the user’s emails, we can identify the most common and vulnerable contacts, send internal spear-phishing emails that come from our victim, and infect their peers. We can also use the victim’s email account to exfiltrate data that we find in 365.

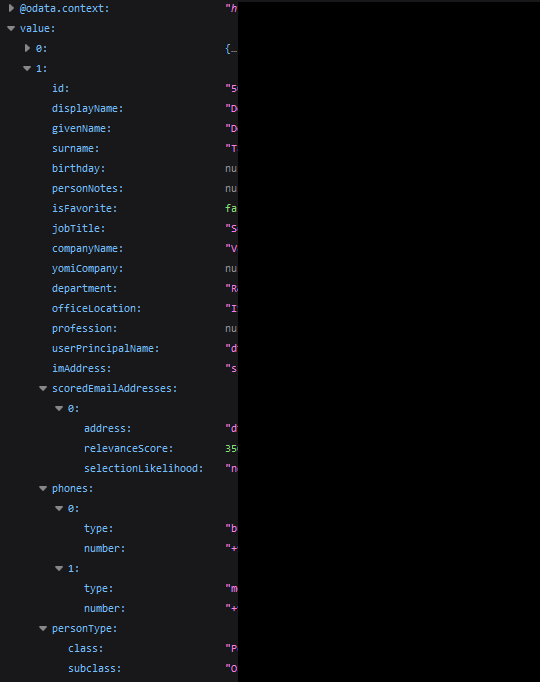

Microsoft also provides insights about the victim’s peers through the API. The peer data could be used to pinpoint the users the victim interacts with most:

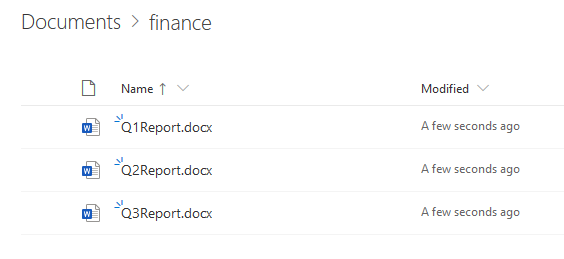

As we mentioned above, we can modify the user’s files with the right permissions. Hmm, what could we possibly do with a file modification API?? 😊

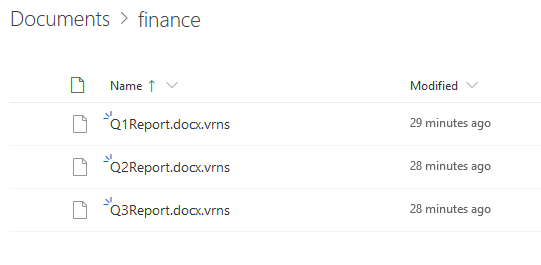

One option is to turn our malicious Azure app into ransomware that remotely encrypts files that the user has access to on SharePoint and OneDrive:

Although this method of encrypting files is not foolproof, as some files might be recoverable through stricter backup settings, tenants with default configurations could risk losing data permanently. But again, we could always exfiltrate sensitive data and threaten to leak it publicly unless our ransom is paid.

Other resources:

- Kevin Mitnick presented a similar method of cloud encryption that affects your inbox mails.

- Krebs on Security also wrote a nice blog post on this attack.

Detections & Mitigations

This phishing technique has relatively low awareness today. Many defenders aren’t familiar with how much damage an attacker can cause when a single victim grants access to a malicious Azure app. Granting consent to an Azure application is not all that different from running a malicious exe or allowing macros to run in a document from an unknown sender, but because it’s a newer vector that doesn’t require executing code on the endpoint, it’s harder to detect and block.

So, what about disabling third-party applications altogether?

According to Microsoft, preventing users from granting consent to applications is not recommended:

“You can turn integrated applications off for your tenancy. This is a drastic step that disables the ability of end-users to grant consent on a tenant-wide basis. This prevents your users from inadvertently granting access to a malicious application. This isn’t strongly recommended as it severely impairs your users’ ability to be productive with third-party applications.”

How do we detect Azure app abuse?

The easiest way to detect illicit consent grants is by monitoring the consent events in Azure AD, and by regularly reviewing your “Enterprise Applications” in the Azure portal.
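As a rough illustration of that monitoring, the sketch below filters exported audit records for consent events. It assumes the records are dictionaries shaped like the Microsoft Graph directory audit entries (`activityDisplayName`, `activityDateTime`, `initiatedBy`, `targetResources`) and that the activity is named “Consent to application”; confirm both against your own tenant’s logs. The `find_consent_events` helper and the sample records are made up for this example.

```python
# Activity name assumed from the Azure AD audit log; confirm it in your tenant.
CONSENT_ACTIVITY = "Consent to application"


def find_consent_events(audit_records):
    """Return who consented to which app, and when."""
    events = []
    for record in audit_records:
        if record.get("activityDisplayName") != CONSENT_ACTIVITY:
            continue
        user = (record.get("initiatedBy") or {}).get("user") or {}
        targets = record.get("targetResources") or []
        events.append({
            "when": record.get("activityDateTime"),
            "user": user.get("userPrincipalName"),
            "app": targets[0].get("displayName") if targets else None,
        })
    return events


# Made-up sample records for illustration only.
sample_records = [
    {
        "activityDisplayName": "Consent to application",
        "activityDateTime": "2020-03-01T09:15:00Z",
        "initiatedBy": {"user": {"userPrincipalName": "victim@example.com"}},
        "targetResources": [{"displayName": "MicrosoftOffice"}],
    },
    {
        "activityDisplayName": "Update user",
        "activityDateTime": "2020-03-01T09:20:00Z",
        "initiatedBy": {"user": {"userPrincipalName": "admin@example.com"}},
        "targetResources": [{"displayName": "victim@example.com"}],
    },
]

for event in find_consent_events(sample_records):
    print(event["when"], event["user"], "consented to", event["app"])
```

In practice you would feed this from whatever export or SIEM pipeline already collects your Azure AD audit logs and alert on app names you don’t recognize.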

Ask yourself these questions:

- Do I know this application?

- Is it verified by an organization I trust?
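Those two questions can be partly automated when you review a long list of apps. The sketch below assumes you have already exported service principals and delegated permission grants as dictionaries with Microsoft Graph style fields (`appOwnerOrganizationId`, `verifiedPublisher`, `clientId`, `scope`). The `flag_suspicious_apps` helper, the scope watch list, and all sample IDs are hypothetical.

```python
# Illustrative watch list only; adjust it for your environment.
RISKY_SCOPES = {
    "mail.read", "mail.readwrite", "mail.send",
    "files.read.all", "files.readwrite.all", "user.read.all",
}


def flag_suspicious_apps(service_principals, grants, home_tenant_id):
    """Flag apps from other tenants with no verified publisher and risky scopes."""
    # Collect every delegated scope granted to each app (keyed by its object ID).
    scopes_by_app = {}
    for grant in grants:
        granted = (grant.get("scope") or "").split()
        scopes_by_app.setdefault(grant["clientId"], set()).update(granted)

    findings = []
    for sp in service_principals:
        scopes = scopes_by_app.get(sp["id"], set())
        risky = sorted(s for s in scopes if s.lower() in RISKY_SCOPES)
        verified = bool((sp.get("verifiedPublisher") or {}).get("displayName"))
        external = sp.get("appOwnerOrganizationId") != home_tenant_id
        if risky and external and not verified:
            findings.append({"app": sp.get("displayName"), "risky_scopes": risky})
    return findings


# Made-up inventory for illustration only.
apps = [
    {"id": "sp-1", "displayName": "MicrosoftOffice",
     "appOwnerOrganizationId": "attacker-tenant", "verifiedPublisher": {}},
    {"id": "sp-2", "displayName": "Internal HR Portal",
     "appOwnerOrganizationId": "our-tenant", "verifiedPublisher": {}},
]
app_grants = [
    {"clientId": "sp-1", "scope": "User.Read Mail.Read Files.ReadWrite.All"},
    {"clientId": "sp-2", "scope": "User.Read Mail.Send"},
]

for finding in flag_suspicious_apps(apps, app_grants, "our-tenant"):
    print(finding["app"], finding["risky_scopes"])
```

A check like this only narrows the list; an unfamiliar app with modest permissions still deserves a manual look.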

To verify you’re running the most recent version of DatAlert and have the correct threat models enabled, please reach out to your account team or Varonis support.

How do we remove malicious apps?

Using the Azure portal, go to “Enterprise Applications” in the “Azure Active Directory” tab and delete the malicious applications. A regular user can also remove access by browsing to https://myapps.microsoft.com, reviewing the apps listed there, and revoking privileges as necessary.
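If you prefer to script the cleanup, the same removal can be expressed as Microsoft Graph requests. The sketch below only builds the list of requests and sends nothing. It assumes the v1.0 `oauth2PermissionGrants` and `servicePrincipals` endpoints and an exported list of grants; the `removal_plan` helper and the IDs are hypothetical, so check the calls against current Microsoft documentation before running anything against a tenant.

```python
GRAPH = "https://graph.microsoft.com/v1.0"


def removal_plan(service_principal_id, grants):
    """List the requests that revoke an app's delegated grants, then delete it."""
    steps = [
        ("DELETE", f"{GRAPH}/oauth2PermissionGrants/{grant['id']}")
        for grant in grants
        if grant["clientId"] == service_principal_id
    ]
    # Deleting the service principal removes the app from Enterprise Applications.
    steps.append(("DELETE", f"{GRAPH}/servicePrincipals/{service_principal_id}"))
    return steps


# Made-up IDs for illustration only.
exported_grants = [
    {"id": "grant-a", "clientId": "sp-1"},
    {"id": "grant-b", "clientId": "sp-2"},
]

for method, url in removal_plan("sp-1", exported_grants):
    print(method, url)
```

Printing the plan first gives you a chance to review exactly what will be removed before you execute it with an authenticated client.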

For detailed instructions see: https://docs.microsoft.com/en-us/microsoft-365/security/office-365-security/detect-and-remediate-illicit-consent-grants

Meet Our IR & Forensics Teams

If you have any questions about this attack or want to have an office hours session with our incident response and forensics teams to see what else they’re seeing in the field, simply reach out and we’ll set you up.
